Reviewed this week:

⭐Hymba: A Hybrid-head Architecture for Small Language Models

When Precision Meets Position: BFloat16 Breaks Down RoPE in Long-Context Training

LLaVA-o1: Let Vision Language Models Reason Step-by-Step

⭐: Papers that I particularly recommend reading.

⭐Hymba: A Hybrid-head Architecture for Small Language Models

State Space Models (SSMs), like Mamba and Mamba-2, are efficient: they process sequences with a fixed-size state, so their per-token cost stays constant, and they have hardware-optimized implementations. They can easily encode very long sequences, but they struggle with memory recall tasks, which leads to weaker performance on benchmarks.

Hymba is a new model architecture by NVIDIA that combines attention heads and SSM heads within the same layer. This parallel hybrid-head design allows the model to use the strengths of both attention (high-resolution memory recall) and SSMs (efficient context summarization). This integration increases flexibility and improves handling of different memory and information flow tasks. Hymba also introduces learnable meta tokens, prepended to input sequences, which interact with all tokens, acting as a compressed representation of knowledge and improving recall and general task performance.
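To make the parallel design concrete, here is a toy sketch in plain Python. It is not NVIDIA's implementation: the attention head has no learned projections, the SSM is reduced to a simple decaying recurrence, and fusing the two outputs by a plain average is an assumption made for illustration. The names `causal_attention`, `ssm_scan`, and `hybrid_layer` are hypothetical.

```python
import math

def causal_attention(xs):
    """Single-head causal softmax attention with q = k = v = x (no learned weights)."""
    out = []
    for t, q in enumerate(xs):
        scores = [sum(a * b for a, b in zip(q, xs[j])) for j in range(t + 1)]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([
            sum(w * xs[j][d] for j, w in enumerate(weights)) / total
            for d in range(len(q))
        ])
    return out

def ssm_scan(xs, decay=0.9):
    """Toy recurrence h_t = decay * h_{t-1} + (1 - decay) * x_t with a fixed-size state."""
    state = [0.0] * len(xs[0])
    out = []
    for x in xs:
        state = [decay * h + (1.0 - decay) * xi for h, xi in zip(state, x)]
        out.append(list(state))
    return out

def hybrid_layer(tokens, meta_tokens):
    """Run both heads in parallel on the same input and average their outputs.

    Meta tokens are prepended to the sequence (learnable in the real model,
    fixed vectors here), then dropped from the returned output.
    """
    xs = meta_tokens + tokens
    attn_out = causal_attention(xs)
    ssm_out = ssm_scan(xs)
    fused = [[0.5 * (a + s) for a, s in zip(av, sv)]
             for av, sv in zip(attn_out, ssm_out)]
    return fused[len(meta_tokens):]

if __name__ == "__main__":
    print(hybrid_layer([[1.0, 0.0], [0.0, 1.0]], meta_tokens=[[0.5, 0.5]]))
```

The point of the sketch is only the data flow: both heads see the same input, including the prepended meta tokens, and their outputs are merged within one layer rather than in alternating layers.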

This architecture is significantly different from other models that combine SSMs and attention, like Zamba and Jamba, which stack layers of either SSMs or Transformers but don’t combine them within the same layer.

Hymba uses shared KV caches between layers and sliding window attention for most layers to further reduce memory and computational costs.
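A back-of-the-envelope sketch shows why these two choices shrink the cache. All numbers and the function `kv_cache_entries` are hypothetical and not taken from the paper. It counts cached token positions per layer, and assumes that only the sliding-window layers share a cache, once per group of `share` layers.

```python
import math

def kv_cache_entries(seq_len, n_global, n_local, window, share=1):
    """Count cached token positions summed over layers.

    n_global: layers with full attention (cache grows with seq_len)
    n_local:  layers with sliding window attention (cache capped at `window`)
    share:    number of local layers that reuse one KV cache (assumption)
    """
    per_global = seq_len
    per_local = min(seq_len, window)
    stored_local = math.ceil(n_local / share)  # one cache per sharing group
    return n_global * per_global + stored_local * per_local

if __name__ == "__main__":
    full = kv_cache_entries(8192, n_global=32, n_local=0, window=1024)
    windowed = kv_cache_entries(8192, n_global=3, n_local=29, window=1024)
    shared = kv_cache_entries(8192, n_global=3, n_local=29, window=1024, share=2)
    print(full, windowed, shared)
```

With these made-up settings, the sliding window accounts for most of the reduction, and cache sharing cuts the remaining local-layer cost roughly in half.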

Evaluations show that Hymba-1.5B achieves higher accuracy than larger models like Llama 3.2 3B on commonsense reasoning tasks while being faster and requiring a significantly smaller cache. Instruction-tuned variants, such as Hymba-1.5B-Instruct, perform well on benchmarks like GSM8K and GPQA, often outperforming much larger models. Fine-tuning with DoRA (another work by NVIDIA) further highlights Hymba's capability: it achieves better results on RoleBench than larger models like Llama-3.1-8B-Instruct.

When Precision Meets Position: BFloat16 Breaks Down RoPE in Long-Context Training

Rotary Position Embedding (RoPE) has become essential for extending context length: it encodes relative positions through trigonometric rotations, which lets models adapt to longer contexts with minimal training. However, long-context training remains challenging, notably because of its high GPU memory requirements, even when using BFloat16 to reduce memory consumption.

A critical issue with BFloat16 is its limited precision, which disrupts RoPE's relative positional encoding in long-context scenarios, especially as window sizes grow. The problem stems from accumulated numerical errors and is resolved by using Float32 precision. The analysis shows that inconsistencies in the position ID of the first token of the attention window contribute significantly to this breakdown, especially in standard attention mechanisms where tokens attend to all previous ones.
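The toy example below illustrates one way limited precision can break the relative property. It is a simplified illustration, not the paper's analysis: it uses a single RoPE frequency of 1.0 (so the rotation angle equals the position) and emulates BFloat16 by rounding the angle with a hypothetical helper, `to_bf16`. BFloat16 keeps only 8 significant bits, so not every integer above 256 is representable.

```python
import math
import struct

def to_bf16(x: float) -> float:
    """Round a float to the nearest BFloat16 value (round-to-nearest-even)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # rounding bias on the dropped 16 bits
    bits &= 0xFFFF0000                   # keep sign, exponent, 7 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def rope_score(pos_q: int, pos_k: int, low_precision: bool) -> float:
    """Dot product of two copies of the unit vector (1, 0), each rotated by its position."""
    cast = to_bf16 if low_precision else float
    angle_q = cast(float(pos_q))
    angle_k = cast(float(pos_k))
    q = (math.cos(angle_q), math.sin(angle_q))
    k = (math.cos(angle_k), math.sin(angle_k))
    return q[0] * k[0] + q[1] * k[1]

if __name__ == "__main__":
    print(to_bf16(257.0))  # 256.0: position 257 collapses onto 256
    # Same relative distance (1) at two different absolute offsets:
    print(rope_score(1, 0, low_precision=False), rope_score(257, 256, low_precision=False))
    print(rope_score(1, 0, low_precision=True), rope_score(257, 256, low_precision=True))
```

At full precision, both pairs give the same score because only the relative distance matters. With the rounded angles, positions 256 and 257 become indistinguishable, so the score at the larger offset no longer matches the score at the small one.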

To address these challenges, the authors introduce AnchorAttention. It treats the first token as a shared anchor across all documents in a context window, which keeps position IDs consistent and reduces numerical errors. By limiting the tokens involved in attention computations, AnchorAttention prevents cumulative deviations and enables models to learn effectively from shorter sequences.
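As a rough sketch of the masking idea described above (my reading of the description, not the authors' code), consider several documents packed into one context window. Each token attends causally to tokens of its own document, plus one shared anchor token. The function name `anchor_attention_mask` and the choice of index 0 as the anchor are assumptions.

```python
def anchor_attention_mask(doc_ids, anchor_index=0):
    """Build a boolean attention mask for documents packed into one window.

    doc_ids[i] is the document that token i belongs to.
    mask[i][j] is True when token i may attend to token j:
    j must not be in the future, and j must be either in the same
    document as i or the shared anchor token.
    """
    n = len(doc_ids)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):  # causal: only current and earlier tokens
            same_doc = doc_ids[i] == doc_ids[j]
            mask[i][j] = same_doc or j == anchor_index
    return mask

if __name__ == "__main__":
    # Tokens 0-2 belong to document 0, tokens 3-4 to document 1.
    for row in anchor_attention_mask([0, 0, 0, 1, 1]):
        print(["x" if allowed else "." for allowed in row])
```

In this sketch, tokens of the second document never see the body of the first one, only the anchor, so each document is effectively a short sequence that starts from the same reference token.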

The authors released their code here:

GitHub: haonan3/AnchorContext

LLaVA-o1: Let Vision Language Models Reason Step-by-Step

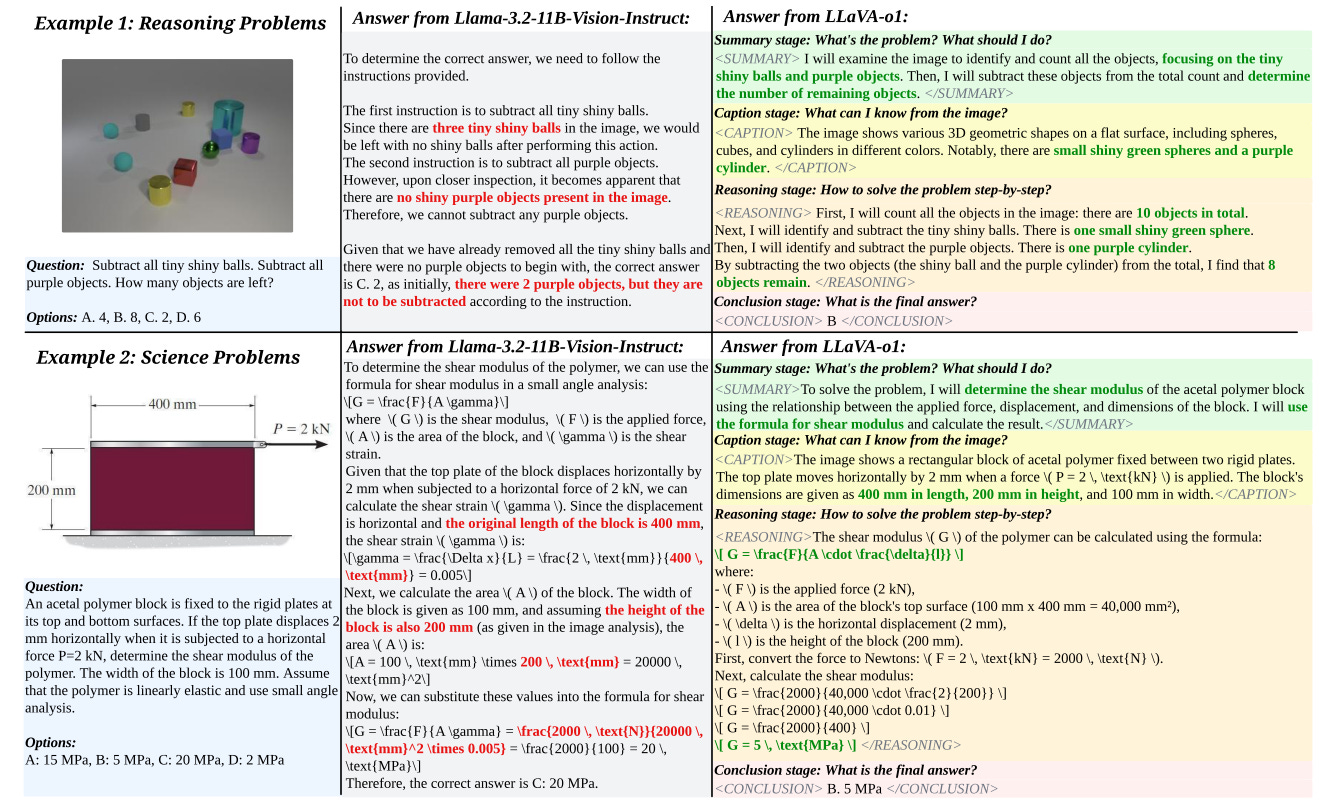

VLMs often rely on direct prediction, generating brief answers without structured reasoning. This approach struggles with tasks requiring logical analysis. Incorporating Chain-of-Thought (CoT) reasoning improves step-by-step reasoning but still leads to errors and hallucinations due to unstructured and inconsistent reasoning processes.

LLaVA-o1 introduces a systematic and structured reasoning approach, dividing its responses into four distinct stages: summary, caption (for image-related tasks), reasoning, and conclusion. Each stage is clearly tagged, helping the model maintain clarity and logic throughout the reasoning process. This method organizes problems, processes information systematically, and derives conclusions logically, avoiding premature or flawed reasoning paths.
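A tagged response of this kind is easy to split into its stages. The sketch below assumes XML-like tags named after the four stages (`<SUMMARY>`, `<CAPTION>`, `<REASONING>`, `<CONCLUSION>`). The exact tag format is an assumption here, and `parse_stages` is a hypothetical helper.

```python
import re

# Assumed tag names, one per stage described above.
STAGE_TAGS = ("SUMMARY", "CAPTION", "REASONING", "CONCLUSION")

def parse_stages(text: str) -> dict:
    """Return a dict mapping each stage found in `text` to its content."""
    stages = {}
    for tag in STAGE_TAGS:
        match = re.search(rf"<{tag}>(.*?)</{tag}>", text, flags=re.DOTALL)
        if match:
            stages[tag.lower()] = match.group(1).strip()
    return stages

if __name__ == "__main__":
    response = (
        "<SUMMARY>Count the apples in the image.</SUMMARY>"
        "<CAPTION>A bowl with three red apples.</CAPTION>"
        "<REASONING>Each apple is visible, so I count them one by one.</REASONING>"
        "<CONCLUSION>3</CONCLUSION>"
    )
    print(parse_stages(response)["conclusion"])
```

Keeping the conclusion in its own tagged stage means the final answer can be extracted without touching the intermediate reasoning.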

The model is trained with supervised fine-tuning on a custom dataset, LLaVA-o1-100k, whose samples follow this stage-based reasoning format. For inference, it employs a stage-level beam search, generating multiple candidate outputs at each stage and keeping the best one to enhance accuracy and stability.
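The inference loop can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: `generate` and `score` are hypothetical callables standing in for the model's sampling and for whatever criterion selects the best candidate, and only one candidate is kept per stage.

```python
from typing import Callable, List

STAGES = ["summary", "caption", "reasoning", "conclusion"]

def stage_level_search(
    prompt: str,
    generate: Callable[[str, str, int], str],
    score: Callable[[str, str, str], float],
    num_candidates: int = 2,
) -> List[str]:
    """For each stage, sample several candidates and keep the best-scoring one.

    generate(context, stage, i) returns the i-th candidate text for `stage`.
    score(context, stage, candidate) returns a number; higher is better.
    The chosen text is appended to the context before the next stage.
    """
    context = prompt
    chosen = []
    for stage in STAGES:
        candidates = [generate(context, stage, i) for i in range(num_candidates)]
        best = max(candidates, key=lambda c: score(context, stage, c))
        chosen.append(best)
        context += best
    return chosen

if __name__ == "__main__":
    # Dummy generator and scorer, for demonstration only.
    fake_generate = lambda ctx, stage, i: f"{stage}-{'x' * i}"
    fake_score = lambda ctx, stage, cand: len(cand)
    print(stage_level_search("question", fake_generate, fake_score, num_candidates=3))
```

Selecting at the stage level sits between picking the best of several complete answers and scoring every sentence: an error in an early stage can be discarded before later stages build on it.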

Experiments on multimodal reasoning benchmarks show that LLaVA-o1 performs well on complex tasks: it outperforms standard CoT prompting, and its performance keeps improving as more compute is allocated at inference time.

Note: The authors later renamed it LLaVA-CoT.