Reviewed this week:

⭐Differential Transformer

Falcon Mamba: The First Competitive Attention-free 7B Language Model

PrefixQuant: Static Quantization Beats Dynamic through Prefixed Outliers in LLMs

Pixtral 12B

⭐: Papers that I particularly recommend reading.

Differential Transformer

The Transformer, particularly its decoder-only variant, is the standard architecture for LLMs. However, Transformers struggle to focus attention on key information and often assign considerable attention to irrelevant context, a problem known as "attention noise." To address this, the Differential Transformer (DIFF Transformer) introduces a differential attention mechanism: it partitions the query and key vectors into two groups, computes a separate softmax attention map for each group, and subtracts one map from the other to cancel out the noise. This method, similar to noise-canceling techniques in engineering, helps the model concentrate on essential information, improving retrieval accuracy.

Experiments show that the DIFF Transformer retrieves relevant information more accurately than standard Transformers. It assigns higher attention scores to correct answers and lower scores to irrelevant context, which helps it handle longer sequences and larger contexts. Scaling tests show that the DIFF Transformer needs only about 65% of the model size or training tokens of a standard Transformer to reach comparable performance, with additional advantages in reducing model "hallucinations" and improving in-context learning.

Overall, DIFF Transformer outperforms standard Transformers in language modeling and downstream tasks, and it reduces activation outliers.
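
To make the subtraction concrete, here is a minimal pure-Python sketch of differential attention for a single head. It is a simplification and not the authors' implementation: there is no causal mask, no multi-head normalization, and `lam` is a fixed scalar here, whereas the paper learns it. The function names and the toy inputs are my own.

```python
import math

def softmax(row):
    # Numerically stable softmax over one row of scores.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention_map(queries, keys):
    # Row-wise softmax(Q K^T / sqrt(d)) for lists of vectors.
    d = len(keys[0])
    return [
        softmax([sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys])
        for q in queries
    ]

def diff_attention(q1, k1, q2, k2, values, lam):
    # Differential map: softmax(Q1 K1^T) - lam * softmax(Q2 K2^T), then applied to V.
    a1 = attention_map(q1, k1)
    a2 = attention_map(q2, k2)
    diff = [[x - lam * y for x, y in zip(r1, r2)] for r1, r2 in zip(a1, a2)]
    dim = len(values[0])
    out = [[sum(w * v[j] for w, v in zip(row, values)) for j in range(dim)] for row in diff]
    return diff, out

# Toy, hypothetical inputs: 2 query positions, 3 key positions, head dimension 2.
q1 = [[1.0, 0.0], [0.0, 1.0]]
k1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
q2 = [[0.5, 0.5], [0.5, 0.5]]
k2 = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]   # second map is uniform: pure "noise"
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

diff_map, output = diff_attention(q1, k1, q2, k2, values, lam=0.5)
```

Since each softmax row sums to 1, each row of the differential map sums to `1 - lam`. Attention mass that both maps assign to the same positions is what gets canceled.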

Falcon Mamba: The First Competitive Attention-free 7B Language Model

LLMs are largely based on the Transformer architecture, whose attention mechanism offers strong performance but faces quadratic complexity with longer sequences. This has led researchers to explore alternative architectures, including efficient attention variants like FlashAttention and sliding window attention, as well as new non-Transformer models like Griffin, RWKV, and Mamba.

While promising, most non-Transformer models have yet to match the performance of optimized, Transformer-based LLMs at scale.

The Falcon Mamba 7B model, introduced in this report, is presented as the first pure Mamba-based State Space Language Model (SSLM) to be successfully scaled to competitive performance. Falcon Mamba 7B performs on par with or better than strong Transformer-based models, such as Llama 3.1 8B and Mistral 7B, and outperforms other non-Transformer designs like RecurrentGemma and RWKV-based models. Notably, its memory use stays constant regardless of context length, which makes inference efficient for tasks that require generating very long sequences.
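
To illustrate the constant-memory claim, here is a toy sketch. It is not Mamba's selective scan, which makes its parameters depend on the input. It is only a fixed diagonal linear recurrence, compared with a count of how many values a Transformer KV cache would hold. The layer, head, and dimension numbers are illustrative assumptions, not taken from the report.

```python
def recurrent_scan(tokens, decay, gain):
    # Toy diagonal linear recurrence: h_t = decay * h_{t-1} + gain * x_t.
    # The state has a fixed size, whatever the number of tokens processed.
    state = [0.0] * len(decay)
    for x in tokens:
        state = [a * h + b * x for a, b, h in zip(decay, gain, state)]
    return state

def kv_cache_entries(num_tokens, num_layers, num_kv_heads, head_dim):
    # Scalars stored by a Transformer decoder's KV cache: keys + values for every token.
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens

# Hypothetical sizes, for illustration only.
decay = [0.9, 0.5, 0.1, 0.99]
gain = [0.1, 0.5, 0.9, 0.01]

short_state = recurrent_scan([1.0] * 10, decay, gain)
long_state = recurrent_scan([1.0] * 10_000, decay, gain)

cache_1k = kv_cache_entries(1_000, num_layers=32, num_kv_heads=8, head_dim=128)
cache_2k = kv_cache_entries(2_000, num_layers=32, num_kv_heads=8, head_dim=128)
```

The recurrent state has the same length after 10 or 10,000 tokens, while the KV cache count doubles when the context doubles.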

Unfortunately, I have found that Falcon Mamba and other models with alternative architectures are still far from easy to deploy in production. They are difficult to fine-tune and quantize without custom code.

PrefixQuant: Static Quantization Beats Dynamic through Prefixed Outliers in LLMs

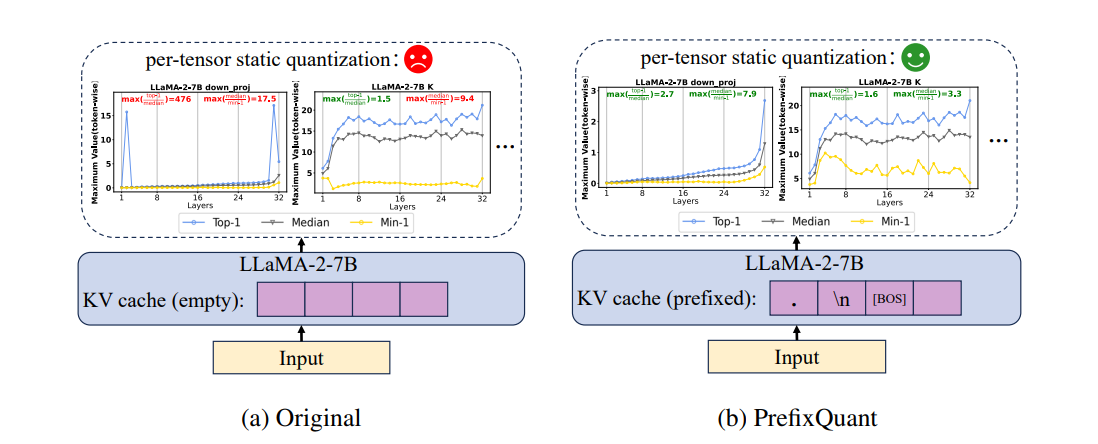

Quantization reduces memory usage and accelerates inference in LLMs. However, it often struggles with token-wise activation outliers: a few tokens have activations far larger than the rest, which causes significant accuracy loss and makes standard per-tensor static quantization ineffective. Existing methods, such as dynamic per-token quantization, can handle these outliers but add computational overhead and lack compatibility with certain optimizations.

PrefixQuant introduces a novel approach to solve this issue by identifying outlier tokens offline. Recognizing that outliers typically occur in predictable positions (such as initial tokens) or on low-semantic tokens like punctuation, PrefixQuant selectively "prefixes" these tokens in the KV cache. This process, done without retraining, minimizes outliers during inference. In benchmarks, PrefixQuant achieves significant performance gains over previous methods like QuaRot.

PrefixQuant also delivers substantial speed improvements. By eliminating token-wise outliers, it avoids the need for computationally expensive dynamic quantization. This yields up to a 2.81× speedup over FP16 models in end-to-end inference tests.
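
To see why a single outlier token is so harmful to static per-tensor quantization, here is a small sketch with made-up activations. It is not PrefixQuant's code. It only compares the 4-bit quantization error on ordinary tokens when the one shared scale is calibrated with and without the outlier token in the tensor. All names and values are hypothetical.

```python
def quantize_dequantize(values, scale, qmax=7):
    # Symmetric round-to-nearest quantization (4-bit signed range), then dequantization.
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]

def max_abs(rows):
    return max(abs(v) for row in rows for v in row)

def mean_abs_error(rows, scale, qmax=7):
    errors = [
        abs(v - d)
        for row in rows
        for v, d in zip(row, quantize_dequantize(row, scale, qmax))
    ]
    return sum(errors) / len(errors)

# Made-up activations: the first token is an outlier, the others are ordinary.
activations = [
    [120.0, -95.0, 110.0, 80.0],   # outlier token (for example, an initial token)
    [0.8, -0.5, 0.3, 1.0],
    [-0.9, 0.4, 0.7, -0.2],
    [0.1, -1.0, 0.6, 0.5],
]
normal_tokens = activations[1:]

# Static per-tensor scale calibrated with the outlier token present.
scale_with_outlier = max_abs(activations) / 7
# Static per-tensor scale when the outlier token is kept out of the quantized tensor.
scale_without_outlier = max_abs(normal_tokens) / 7

error_with = mean_abs_error(normal_tokens, scale_with_outlier)
error_without = mean_abs_error(normal_tokens, scale_without_outlier)
```

With the outlier in the tensor, the scale is so coarse that every ordinary activation rounds to zero. Without it, the error on each value is bounded by half the much smaller scale, with no need to recompute a scale per token at inference time.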

The authors released their code here:

GitHub: ChenMnZ/PrefixQuant

Pixtral 12B

Pixtral 12B is a multimodal language model working with both images and text. Built for multimodal conversations, Pixtral is instruction-tuned on a large-scale dataset of interleaved image and text documents. Pixtral uses a vision encoder with RoPE-2D position embeddings, which processes images at their native resolution and aspect ratio. This flexible design lets Pixtral process low-resolution images in latency-sensitive scenarios and switch to high resolution for tasks requiring fine-grained analysis.

Pixtral performs on par with, or better than, models like Qwen2-VL 7B and Llama-3.2 11B on multimodal benchmarks, including MMMU and MathVista. It even outperforms much larger models, such as Llama-3.2 90B, as well as closed models like Claude-3 Haiku and Gemini-1.5 Flash 8B.

In their paper, Mistral AI also points out notable inconsistencies in multimodal evaluation protocols, which often lack standardization and use rigid exact-match metrics that penalize answers that are correct but not formatted identically to the reference answers (e.g., “6” versus “6.0”). To address these issues, they introduced "Explicit" prompts specifying the expected answer format, along with flexible parsing techniques to improve fairness in scoring (which especially benefits Pixtral). They open-sourced this evaluation framework to support consistent assessment practices across models.
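
The “6” versus “6.0” problem is easy to reproduce. The sketch below is not Mistral AI's evaluation framework. It is a hypothetical pair of scoring functions that contrasts a strict exact match with a more forgiving comparison that parses numbers first.

```python
import math

def exact_match(prediction: str, reference: str) -> bool:
    # Strict string comparison, as used by rigid exact-match metrics.
    return prediction.strip() == reference.strip()

def flexible_match(prediction: str, reference: str) -> bool:
    # Compare as numbers when both parse as floats, otherwise as case-insensitive text.
    try:
        return math.isclose(float(prediction), float(reference))
    except ValueError:
        return prediction.strip().lower() == reference.strip().lower()

strict = exact_match("6.0", "6")
flexible = flexible_match("6.0", "6")
```

Here `strict` rejects a correct answer because of its formatting, while `flexible` accepts it. A real parser would need to handle many more cases, such as units, fractions, and answers embedded in a sentence.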

Furthermore, Mistral AI notes that existing multimodal benchmarks mostly focus on single-turn or multiple-choice question answering, which does not reflect how models are used in real-world applications, such as multi-turn, long-form interactions. To bridge this gap, they introduced MM-MT-Bench, a new multimodal, multi-turn benchmark that better evaluates conversational models.

If you are interested in using the model, I showed how to run Pixtral 12B with vLLM here:

If you have any questions about one of these papers, write them in the comments. I will answer them.