Reviewed this week

Tokenization Falling Short: The Curse of Tokenization

Mixture of Scales: Memory-Efficient Token-Adaptive Binarization for Large Language Models

⭐Instruction Pre-Training: Language Models are Supervised Multitask Learners

A Tale of Trust and Accuracy: Base vs. Instruct LLMs in RAG Systems

⭐: Papers that I particularly recommend reading.

New code repositories:

I maintain a curated list of AI code repositories here:

Tokenization Falling Short: The Curse of Tokenization

This work studies the limitations of current tokenization methods and their impact on LLM performance. The authors explore three areas: complex problem solving, token structure probing, and typographical variation.

The paper evaluates LLMs on tokenization-sensitive tasks such as anagram solving and complex mathematical language understanding, probes their understanding of token structure with tasks involving case manipulation and length counting, and introduces an evaluation benchmark, TKEval, to systematically test how resilient LLMs are to tokenization.
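To see why length counting and case manipulation are tokenization-sensitive, consider a toy greedy longest-match tokenizer. The vocabulary below is hypothetical and this is not the tokenizer of any model evaluated in the paper, nor part of TKEval; it only illustrates that a model receives tokens, not characters.

```python
# Hypothetical toy vocabulary; real tokenizers (e.g., BPE) learn theirs from data.
VOCAB = {"straw", "berry", "st", "raw", "s", "t", "r", "a", "w", "b", "e", "y"}


def greedy_tokenize(word: str, vocab: set) -> list:
    """Split `word` by repeatedly taking the longest prefix found in `vocab`."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first and shrink until a vocabulary entry matches.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # No entry matches: fall back to a single-character token.
            tokens.append(word[i])
            i += 1
    return tokens


for word in ["strawberry", "STRAWBERRY"]:
    tokens = greedy_tokenize(word, VOCAB)
    print(word, tokens, "tokens:", len(tokens), "chars:", len(word))
```

Here the model would see "strawberry" as just `['straw', 'berry']`, so answering "how many letters?" requires knowing what is inside each token, while upper-casing the same word yields a completely different sequence of ten single-character tokens.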

The findings show that while scaling up model parameters can make LLMs more robust to tokenization, they still suffer from biases introduced by typographical errors and text format variations.
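One way to measure this kind of bias is to ask each question twice, once as written and once with a typographical or formatting perturbation, and compare accuracy. The helpers below are a hypothetical sketch of such perturbations (adjacent-character swaps, deletions, random casing); they are not the perturbation set used in the paper.

```python
import random


def swap_adjacent(text: str, rng: random.Random) -> str:
    """Swap two neighbouring characters (a common typing error)."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


def drop_char(text: str, rng: random.Random) -> str:
    """Delete one character at a random position."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text))
    return text[:i] + text[i + 1:]


def random_case(text: str, rng: random.Random) -> str:
    """Set each character to upper or lower case at random (a format variation)."""
    return "".join(c.upper() if rng.random() < 0.5 else c.lower() for c in text)


PERTURBATIONS = {"swap": swap_adjacent, "drop": drop_char, "case": random_case}


def perturb(text: str, kind: str, seed: int = 0) -> str:
    """Apply one named perturbation with a fixed seed so evaluations are reproducible."""
    return PERTURBATIONS[kind](text, random.Random(seed))


prompt = "Which word is an anagram of listen?"
for kind in PERTURBATIONS:
    print(kind, "->", perturb(prompt, kind))
```

A model that is robust in the sense discussed above should give the same answer to the original and the perturbed prompt.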

The analysis shows that even state-of-the-art models such as Llama 3, Mistral, and GPT-4 struggle with typographical variations, particularly at the character level. It also shows that regularized tokenization approaches, such as BPE-dropout with moderate drop rates, can improve model resilience.
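BPE-dropout (Provilkov et al., 2020) keeps the usual BPE merge table but, at every merge step, randomly skips each applicable merge with probability p, so the same word can be segmented in several ways during training. A minimal sketch, assuming a hypothetical four-entry merge table; the "moderate" drop rates from the paper are not reproduced here.

```python
import random

# Hypothetical merge table, in priority order (earlier = applied first).
MERGES = [("l", "o"), ("lo", "w"), ("e", "r"), ("low", "er")]


def bpe_encode(word, merges, dropout=0.0, rng=None):
    """Segment `word` with BPE; with dropout > 0 this becomes BPE-dropout."""
    rng = rng or random.Random()
    ranks = {pair: rank for rank, pair in enumerate(merges)}
    symbols = list(word)
    while len(symbols) > 1:
        # Applicable merges at this step; each is independently dropped with prob. `dropout`.
        candidates = [
            (ranks[pair], i)
            for i, pair in enumerate(zip(symbols, symbols[1:]))
            if pair in ranks and rng.random() >= dropout
        ]
        if not candidates:
            break  # nothing left to merge (or every candidate was dropped)
        _, i = min(candidates)  # highest-priority merge, leftmost occurrence
        symbols[i:i + 2] = [symbols[i] + symbols[i + 1]]
    return symbols


print(bpe_encode("lower", MERGES))  # deterministic BPE
rng = random.Random(0)
for _ in range(3):
    print(bpe_encode("lower", MERGES, dropout=0.3, rng=rng))
```

With `dropout=0.0` this reduces to ordinary BPE and returns `['lower']`; with `dropout=1.0` every merge is skipped and the word stays split into characters. Intermediate values expose the model to finer-grained segmentations of the same word, which is the usual intuition for why the technique improves robustness.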

Mixture of Scales: Memory-Efficient Token-Adaptive Binarization for Large Language Models

This paper introduces a novel binarization technique called Mixture of Scales (BinaryMoS).

Traditional binarization methods attach scaling factors to the binarized weights to set their effective magnitudes. While these scaling factors account for only a small fraction of the overall model size, they play a critical role in minimizing binarization error.
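As a reference point for what a scaling factor does, here is a minimal sketch of conventional per-row binarization: each row of a weight matrix is approximated by α·sign(w), and choosing α as the mean absolute value of the row minimizes the squared reconstruction error. Per-row scaling is one common choice; specific methods differ in granularity, and this is not BinaryMoS itself.

```python
def binarize_row(row):
    """Return (alpha, signs) such that alpha * signs approximates `row`.

    alpha = mean(|w|) minimizes sum((w - alpha * sign(w))**2) over alpha.
    """
    alpha = sum(abs(w) for w in row) / len(row)
    signs = [1.0 if w >= 0 else -1.0 for w in row]
    return alpha, signs


def squared_error(row, alpha, signs):
    """Squared reconstruction error of alpha * signs against the original row."""
    return sum((w - alpha * s) ** 2 for w, s in zip(row, signs))


row = [0.12, -0.40, 0.05, -0.08, 0.31]
alpha, signs = binarize_row(row)
print("alpha:", alpha)
print("error with alpha:", squared_error(row, alpha, signs))
print("error with plain +/-1:", squared_error(row, 1.0, signs))
```

Storing one floating-point α per row next to 1-bit signs is why the scales are a small share of the model, yet dropping them (using plain ±1) makes the approximation far worse.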

BinaryMoS replaces these static scaling factors with token-adaptive ones. Drawing inspiration from the Mixture of Experts (MoE) approach, which uses multiple expert layers to boost model capacity, BinaryMoS employs multiple scaling factors as experts to enhance the representational capacity of binarized LLMs in a memory-efficient manner.

During inference, BinaryMoS combines these scaling experts linearly based on the context, generating token-adaptive scaling factors that dynamically adjust the represented values of binarized weights, thereby maximizing the model's expressive power. Consequently, BinaryMoS improves the linguistic performance of binarized LLMs with minimal memory overhead.
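The mixing step can be illustrated with a simplified, pure-Python sketch. It rests on our own assumptions: a softmax router computed from the token's activations, output-channel scales only, and hypothetical names (`mos_linear`, `scale_experts`, `router_w`). The paper's actual parameterization (for example, whether it also uses input-side scales) may differ.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mos_linear(x, sign_w, scale_experts, router_w):
    """Token-adaptive binarized linear layer (simplified sketch).

    x:             activations of one token, length d_in
    sign_w:        binarized weights, d_out rows of +1/-1 values (length d_in each)
    scale_experts: E candidate output-scale vectors, each of length d_out
    router_w:      E router weight vectors, each of length d_in
    """
    # 1) Router: score each scaling expert from this token's activations.
    gates = softmax([sum(r * xi for r, xi in zip(rw, x)) for rw in router_w])
    # 2) Linear combination of the experts -> one scale per output channel, for this token.
    d_out = len(sign_w)
    scales = [sum(g * s[o] for g, s in zip(gates, scale_experts)) for o in range(d_out)]
    # 3) +/-1 matrix-vector product, then rescale each output channel.
    return [scales[o] * sum(w * xi for w, xi in zip(sign_w[o], x)) for o in range(d_out)]


SIGN_W = [[1.0, -1.0], [1.0, 1.0]]        # d_out = 2, d_in = 2
SCALE_EXPERTS = [[0.1, 0.2], [0.3, 0.4]]  # E = 2 experts
ROUTER_W = [[5.0, 0.0], [0.0, 5.0]]       # expert 0 reacts to feature 0, expert 1 to feature 1

print(mos_linear([1.0, 0.0], SIGN_W, SCALE_EXPERTS, ROUTER_W))  # scales close to expert 0
print(mos_linear([0.0, 1.0], SIGN_W, SCALE_EXPERTS, ROUTER_W))  # scales close to expert 1
```

The extra parameters are E scale vectors and a small router, which are tiny next to the d_out × d_in binarized weight matrix; this is what keeps the memory overhead low while letting each token use different effective weight magnitudes.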

⭐Instruction Pre-Training: Language Models are Supervised Multitask Learners

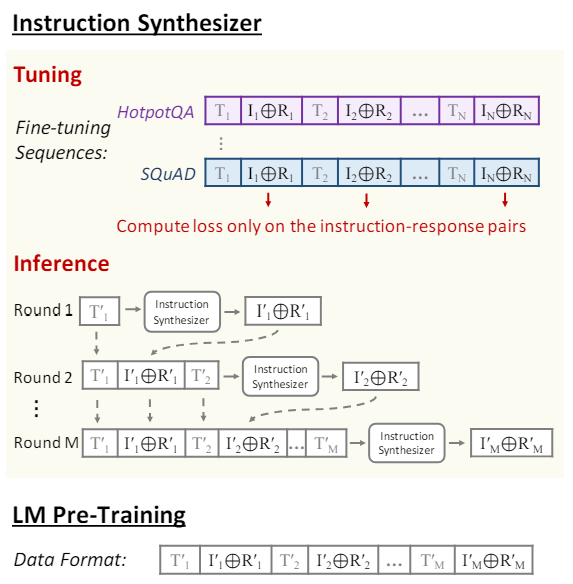

This paper introduces Instruction Pre-Training, a framework that explores supervised multitask learning for pre-training. Instead of pre-training directly on raw corpora, Instruction Pre-Training augments each raw text with instruction-response pairs generated by an instruction synthesizer.

To build the instruction synthesizer, the authors convert a variety of existing datasets into a common format in which each example contains a raw text and the instruction-response pairs that correspond to it. This collection is then used to fine-tune a language model to generate instruction-response pairs from a given raw text.
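The two data formats involved can be sketched as follows: a fine-tuning example for the synthesizer (raw text in, pairs out) and an augmented pre-training sequence (raw text followed by its pairs). The "Question:/Answer:" template, the field names, and the ordering of text and pairs are hypothetical; the released synthesizer in microsoft/LMOps defines its own format.

```python
from dataclasses import dataclass


@dataclass
class InstructionPair:
    instruction: str
    response: str


def format_pairs(pairs):
    """Render instruction-response pairs with a hypothetical Question/Answer template."""
    return "\n\n".join(f"Question: {p.instruction}\nAnswer: {p.response}" for p in pairs)


def synthesizer_example(raw_text, pairs):
    """Fine-tuning example for the synthesizer: raw text in, pairs out."""
    return {"input": raw_text, "target": format_pairs(pairs)}


def instruction_pretrain_text(raw_text, pairs):
    """Augmented pre-training sequence: the raw text followed by pairs synthesized from it."""
    return raw_text + "\n\n" + format_pairs(pairs)


raw = "Photosynthesis converts light energy into chemical energy stored in glucose."
pairs = [InstructionPair("What does photosynthesis convert light energy into?",
                         "Chemical energy stored in glucose.")]
print(synthesizer_example(raw, pairs))
print(instruction_pretrain_text(raw, pairs))
```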

The diversity of the tuning data helps the synthesizer generalize to unseen data. Unlike other works that rely on large or closed-source models, this approach uses open-source models with around 7 billion parameters, which makes it more cost-effective. This efficiency makes it possible to augment raw corpora with 200 million instruction-response pairs spanning more than 40 task categories.

Experiments were conducted in both general pre-training from scratch and domain-adaptive continual pre-training. In pre-training from scratch, a 500M-parameter model pre-trained on 100 billion tokens matched the performance of a 1B-parameter model pre-trained on 300 billion tokens. Moreover, models that underwent Instruction Pre-Training benefited more from subsequent instruction tuning. In continual pre-training, Instruction Pre-Training consistently improved the performance of Llama3-8B in the finance and biomedicine domains, bringing it on par with, and sometimes ahead of, Llama3-70B.
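To put the from-scratch result in perspective, a rough training-compute comparison helps. It uses the common rule-of-thumb approximation C ≈ 6ND from the scaling-law literature (N = parameters, D = training tokens), ignores the cost of running the instruction synthesizer, and assumes the reported token counts are the full training budgets:

```latex
\begin{aligned}
C &\approx 6ND \\
C_{\text{500M}} &\approx 6 \times (5 \times 10^{8}) \times (1 \times 10^{11}) = 3 \times 10^{20}\ \text{FLOPs} \\
C_{\text{1B}} &\approx 6 \times (1 \times 10^{9}) \times (3 \times 10^{11}) = 1.8 \times 10^{21}\ \text{FLOPs} \\
C_{\text{1B}} / C_{\text{500M}} &\approx 6
\end{aligned}
```

Under these assumptions, the smaller model reaches comparable performance with roughly one sixth of the training compute.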

The instruction synthesizer is released on GitHub:

microsoft/LMOps: instruction_pretrain

A Tale of Trust and Accuracy: Base vs. Instruct LLMs in RAG Systems

Pre-training an LLM on large text datasets equips it with a broad understanding of language, syntax, semantics, and general knowledge, producing what is called the "base" version of the model.

After pre-training, LLMs typically undergo two further refinement stages that improve their performance and usability, resulting in what is known as the "instruct" version. The first stage is supervised fine-tuning (SFT), in which the model is trained on curated datasets of instructions paired with expected answers. The second stage aligns the model with human preferences through methods such as reinforcement learning from human feedback (RLHF) or direct preference optimization (DPO). In this phase, human evaluators provide feedback on the model's outputs, typically by comparing or ranking them, and the model is then further trained on this feedback so that its responses are accurate, contextually appropriate, and aligned with human values.
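DPO, mentioned above, trains directly on preference pairs (a chosen response y_w and a rejected response y_l) without a separate reward model or RL loop. Its per-pair loss is −log σ(β[(log π_θ(y_w|x) − log π_ref(y_w|x)) − (log π_θ(y_l|x) − log π_ref(y_l|x))]). A minimal sketch of that formula, assuming the summed response log-probabilities have already been computed; `beta=0.1` is an assumed default, not a value from the paper.

```python
import math


def neg_log_sigmoid(z: float) -> float:
    """-log(sigmoid(z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under the
    trainable policy or the frozen reference model; beta controls how strongly
    the policy is kept close to the reference.
    """
    chosen_shift = policy_chosen - ref_chosen        # policy's gain on the preferred answer
    rejected_shift = policy_rejected - ref_rejected  # policy's gain on the rejected answer
    return neg_log_sigmoid(beta * (chosen_shift - rejected_shift))


# Policy identical to the reference: the loss equals log 2.
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Policy raised the chosen response's log-probability: the loss drops below log 2.
print(dpo_loss(-10.0, -15.0, -12.0, -15.0))
```

Minimizing this loss pushes the policy to raise the likelihood of preferred responses relative to the reference model, and to lower it for rejected ones.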

These instruct models often include specific usage guidelines.

In this paper, the authors compared instruct models against base models in retrieval-augmented generation (RAG).

Interestingly, they found that the base models, without the additional fine-tuning, outperformed the instruct models on RAG tasks.

This finding challenges the assumption that instruct models are superior for RAG tasks.
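To make such a comparison concrete, here is a minimal sketch of how the same retrieved passages might be given to a base model (as a plain continuation prompt) and to an instruct model (wrapped in a chat template), then scored with a "contains the gold answer" check, a common convention in open-domain QA evaluation. The prompt wording, the template string, and all function names are hypothetical; this is not the paper's prompt or evaluation code.

```python
def build_rag_prompt(question, passages, chat_template=None):
    """Plain prompt for a base model, or the same text wrapped in a chat template."""
    context = "\n\n".join(f"Document [{i}]: {p}" for i, p in enumerate(passages, start=1))
    body = f"{context}\n\nQuestion: {question}\nAnswer:"
    if chat_template is None:
        return body  # base model: the answer is a direct continuation
    return chat_template.format(user=body)


def contains_answer(generation, gold_answers):
    """Count a generation as correct if any gold answer appears in it (case-insensitive)."""
    text = generation.lower()
    return any(answer.lower() in text for answer in gold_answers)


def accuracy(generations, gold):
    hits = sum(contains_answer(g, answers) for g, answers in zip(generations, gold))
    return hits / len(generations)


passages = ["Hamlet is a tragedy written by William Shakespeare."]
print(build_rag_prompt("Who wrote Hamlet?", passages))
print(build_rag_prompt("Who wrote Hamlet?", passages,
                       chat_template="USER: {user}\nASSISTANT:"))  # hypothetical template
```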

If you have any questions about one of these papers, write them in the comments. I will answer them.