This week, we read:

⭐LIMO: Less is More for Reasoning

Demystifying Long Chain-of-Thought Reasoning in LLMs

UltraIF: Advancing Instruction Following from the Wild

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

⭐LIMO: Less is More for Reasoning

This paper looks at how LLMs learn to reason, particularly in areas like math and programming, and challenges the common belief that they need massive amounts of training data to do so. Modern LLMs already contain a huge amount of mathematical and logical knowledge from their pre-training data. The challenge, then, is no longer teaching them new concepts from scratch but getting them to use what they already know. Newer models can also generate longer reasoning chains at inference time, which helps them break down complex problems more effectively. This suggests that instead of flooding models with endless training data, a smarter approach might be to give them just a few high-quality examples that encourage deeper thinking.

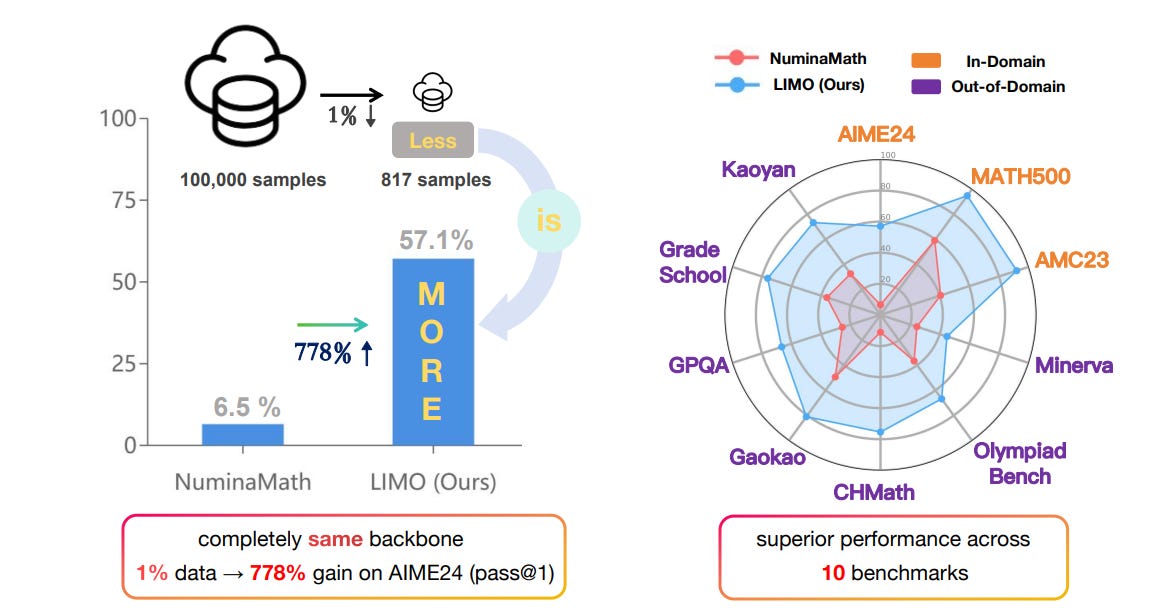

Based on this idea, the authors propose the Less-Is-More Reasoning (LIMO) Hypothesis. They suggest that LLMs don’t need massive fine-tuning to develop strong reasoning skills—what really matters is whether they already have the necessary knowledge from pre-training and whether they’re given examples that show them how to apply it in a structured way. To test this, they trained a model on just 817 carefully chosen examples and found that it outperformed models trained on 100 times more data.
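The post doesn't detail how the 817 examples were chosen. As a purely hypothetical illustration of "fewer but better" curation, the sketch below keeps only problems that a baseline model usually fails (hard enough to require real reasoning), ranks the survivors by a quality score for their worked solution, and keeps the top `k`. The `Candidate` schema, the `baseline_pass_rate` and `quality` fields, the 0.2 threshold, and the ranking rule are all assumptions, not the LIMO authors' pipeline.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A candidate training example (hypothetical schema)."""
    problem: str
    solution: str              # worked reasoning chain plus final answer
    baseline_pass_rate: float  # assumed: share of baseline-model attempts that solve it
    quality: float             # assumed: score for the clarity/rigor of the solution


def select_examples(pool, k=817, max_pass_rate=0.2):
    """Keep hard problems, then return the k with the best-rated solutions.

    Both the difficulty filter and the quality ranking are illustrative assumptions.
    """
    hard = [c for c in pool if c.baseline_pass_rate <= max_pass_rate]
    hard.sort(key=lambda c: c.quality, reverse=True)
    return hard[:k]


if __name__ == "__main__":
    pool = [
        Candidate("p1", "s1", baseline_pass_rate=0.9, quality=0.95),  # too easy, dropped
        Candidate("p2", "s2", baseline_pass_rate=0.1, quality=0.60),
        Candidate("p3", "s3", baseline_pass_rate=0.0, quality=0.85),
    ]
    print([c.problem for c in select_examples(pool, k=2)])  # ['p3', 'p2']
```

The point of the toy is the ordering of operations: filter for difficulty first, then spend the small budget on the best-explained solutions rather than on sheer volume.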

This finding is a big deal because it suggests that even the most complex reasoning abilities might not require enormous datasets, only well-chosen training samples. A model with enough pre-trained knowledge may be able to tackle complex problems after minimal additional training, provided it sees the right kind of reasoning examples.

To support further research, the authors are releasing their fine-tuned models, training code, evaluation pipelines, and datasets to help others explore the potential of data-efficient reasoning in AI:

GitHub: GAIR-NLP/LIMO

Demystifying Long Chain-of-Thought Reasoning in LLMs

This paper examines how LLMs handle long chain-of-thought (CoT) reasoning and the challenges of making it work at scale. While LLMs are already pretty good at things like math and coding, they still struggle with really tough problems, such as advanced math competitions, high-level science questions, and software engineering tasks.

The researchers tested supervised fine-tuning (SFT) on long reasoning chains to help models generate longer reasoning. They found that training models this way improves their performance and makes subsequent reinforcement learning (RL) easier. However, RL doesn't reliably lengthen CoT reasoning in a stable way. To fix this, they introduced a reward-shaping scheme that encourages models to grow their reasoning chains while penalizing repetitive or unhelpful steps.
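To make the idea of a length-aware reward concrete, here is a minimal sketch of one such shaping scheme, loosely in the spirit of the paper's approach. It interpolates the reward along a half-cosine curve over generation length: correct answers earn a bit more when shorter, wrong answers are penalized less when the model kept reasoning longer, and exceeding the length budget gets a fixed penalty. A separate repeated n-gram penalty discourages padding the chain with loops. The function names and every constant here are hypothetical, not the authors' settings.

```python
import math


def cosine_interp(t, T, start, end):
    """Interpolate from `start` (at t=0) to `end` (at t=T) along a half cosine."""
    return end + 0.5 * (start - end) * (1.0 + math.cos(math.pi * t / T))


def length_reward(correct, gen_len, max_len,
                  r0_correct=2.0, rL_correct=1.0,  # hypothetical constants
                  r0_wrong=-10.0, rL_wrong=0.0,
                  exceed_penalty=-10.0):
    """Length-shaped outcome reward (illustrative constants only)."""
    if gen_len >= max_len:
        return exceed_penalty  # ran out of budget without finishing
    if correct:
        # Shorter correct answers get slightly more reward.
        return cosine_interp(gen_len, max_len, r0_correct, rL_correct)
    # Wrong answers are penalized less the longer the model reasoned.
    return cosine_interp(gen_len, max_len, r0_wrong, rL_wrong)


def repetition_penalty(tokens, n=3, weight=-1.0):
    """Penalty proportional to the fraction of repeated n-grams (simplified)."""
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not ngrams:
        return 0.0
    repeated = len(ngrams) - len(set(ngrams))
    return weight * repeated / len(ngrams)


if __name__ == "__main__":
    print(round(length_reward(True, 0, 1000), 3))     # 2.0
    print(round(length_reward(False, 500, 1000), 3))  # -5.0
    print(repetition_penalty("a b c a b c a b c".split()))
```

In an RL loop, the per-sample reward would be something like `length_reward(...) + repetition_penalty(...)`. The cosine shape keeps the reward smooth in length, so the policy is nudged gradually rather than hitting a hard cliff.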

One big challenge with training models on long reasoning chains is finding reliable feedback signals. Good-quality training data is hard to come by, so the researchers experimented with using noisy, web-extracted solutions as a kind of “silver” supervision. Even though these sources aren’t perfect, they found that with the right filtering and training techniques, the models still improved, especially in areas like STEM problem-solving.
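One plausible way to filter noisy web-extracted data, sketched here under my own assumptions, is to keep only examples whose reference answer is short and machine-checkable, so that a simple rule-based verifier can score the model's final answer during RL. The length cutoff, the normalization rules, and the `\boxed{}` answer convention are illustrative choices, not details taken from the paper.

```python
import re


def is_short_form(answer, max_chars=20):
    """Keep answers that look verifiable: non-empty, short, single-line."""
    answer = answer.strip()
    return 0 < len(answer) <= max_chars and "\n" not in answer


def normalize(ans):
    """Lowercase, drop spaces and a trailing period."""
    return ans.strip().lower().replace(" ", "").rstrip(".")


def extract_boxed(text):
    """Return the content of the last \\boxed{...} (no nested braces), if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1] if matches else None


def verify(completion, reference):
    """Rule-based reward: 1.0 if the boxed answer matches the reference, else 0.0."""
    pred = extract_boxed(completion)
    if pred is not None and normalize(pred) == normalize(reference):
        return 1.0
    return 0.0


if __name__ == "__main__":
    silver = [
        {"question": "2+3?", "answer": "5"},
        {"question": "Explain entropy.",
         "answer": "Entropy counts the microstates consistent with a macrostate."},
    ]
    usable = [ex for ex in silver if is_short_form(ex["answer"])]
    print(len(usable))  # 1
    print(verify(r"So the result is \boxed{5}.", usable[0]["answer"]))  # 1.0
```

The trade-off is coverage for reliability: long free-form answers are discarded, but every example that survives yields a reward signal that a simple rule can check.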

The study also analyzes where long CoT abilities come from and how RL can shape them. It turns out that base models already have some core reasoning skills, like branching ideas and checking for mistakes, but they need the right training incentives to use them effectively.

The authors released their code here:

GitHub: eddycmu/demystify-long-cot

UltraIF: Advancing Instruction Following from the Wild

The paper introduces ULTRAIF, a scalable method for generating high-quality instruction-following data for LLMs. Previous approaches to creating instruction datasets either rely on human annotators, which is costly and difficult to scale, or use LLM-driven evolution, which often compromises diversity and correctness.

ULTRAIF addresses these limitations by starting from real-world user instructions. It breaks complex instructions down into simpler components and generates a corresponding evaluation question for each, which is meant to preserve diversity while keeping responses verifiable. Instead of relying on rigid handcrafted constraints, ULTRAIF trains a composer model, UltraComposer, to synthesize instructions with verifiable conditions, which improves the generalization ability of the trained models.
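The decompose-then-compose idea can be pictured as a small data structure: a real user prompt is split into a simpler base query plus a list of constraints, each paired with a yes/no evaluation question; recombining them yields a harder instruction whose response can be checked one question at a time. In this sketch, the `judge` callable stands in for whatever model or rule answers the evaluation questions, and all class and function names are hypothetical, not ULTRAIF's actual code.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Constraint:
    text: str           # e.g. "Use fewer than 50 words."
    eval_question: str  # e.g. "Is the response shorter than 50 words?"


@dataclass
class DecomposedInstruction:
    base_query: str
    constraints: List[Constraint] = field(default_factory=list)

    def compose(self) -> str:
        """Re-attach the constraints to the base query to form the full instruction."""
        return " ".join([self.base_query] + [c.text for c in self.constraints])


def passes_all(response: str, inst: DecomposedInstruction,
               judge: Callable[[str, str], bool]) -> bool:
    """Keep a response only if the judge says 'yes' to every evaluation question."""
    return all(judge(response, c.eval_question) for c in inst.constraints)


def toy_judge(response: str, question: str) -> bool:
    """Rule-based stand-in for an LLM judge (hypothetical; checks word count only)."""
    return len(response.split()) < 50


if __name__ == "__main__":
    inst = DecomposedInstruction(
        base_query="Describe the water cycle.",
        constraints=[Constraint("Use fewer than 50 words.",
                                "Is the response shorter than 50 words?")],
    )
    print(inst.compose())  # Describe the water cycle. Use fewer than 50 words.
    print(passes_all("Water evaporates, condenses, and falls as rain.", inst, toy_judge))  # True
```

Pairing each constraint with its own question is what makes the synthesized data checkable: a response is filtered per constraint rather than judged as a whole.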

ULTRAIF seems to significantly improve instruction-following performance across various domains, including mathematics, reasoning, coding, and general conversation. Applied to the Llama 3.1 8B base model, it brings the model's instruction-following ability up to the level of its Instruct version using a relatively small dataset.