Reviewed this week:

⭐Instruction Following without Instruction Tuning

Discovering the Gems in Early Layers: Accelerating Long-Context LLMs with 1000x Input Token Reduction

VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models

Making Text Embedders Few-Shot Learners

⭐: Papers that I particularly recommend reading.

New code repositories:

⭐Instruction Following without Instruction Tuning

This paper explores the concept of instruction following in language models, typically achieved through instruction tuning, where models are fine-tuned on paired examples of instructions and responses. Previous research demonstrated that instruction tuning is highly sample-efficient, requiring as few as 1,000 instruction-response pairs. However, this work investigates whether instruction following can emerge implicitly through methods not explicitly designed for it.

The study examines two forms of adaptation that perform implicit instruction tuning: (1) response tuning, where models are trained only on responses without their paired instructions, and (2) single-task finetuning, where models are trained on data from a narrow domain, such as poetry generation or Python code generation.
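To make the difference between the two setups concrete, here is a minimal sketch of how training examples might be built for instruction tuning versus response tuning. The chat-template tags (`<|user|>`, `<|assistant|>`), the whitespace "tokenizer", and the helper names are hypothetical. Keeping the assistant tag in response-tuning examples is also an assumption; the paper's exact formatting may differ.

```python
from dataclasses import dataclass

# Hypothetical chat-template tags; the paper's actual template may differ.
USER_TAG = "<|user|>"
ASSISTANT_TAG = "<|assistant|>"


@dataclass
class TrainingExample:
    tokens: list[str]      # toy whitespace "tokens"
    loss_mask: list[bool]  # True where the training loss is computed


def instruction_tuning_example(instruction: str, response: str) -> TrainingExample:
    # Instruction tuning: the instruction is part of the input, but only the
    # response tokens contribute to the loss.
    prompt = [USER_TAG, *instruction.split(), ASSISTANT_TAG]
    answer = response.split()
    return TrainingExample(prompt + answer, [False] * len(prompt) + [True] * len(answer))


def response_tuning_example(response: str) -> TrainingExample:
    # Response tuning: the instruction is dropped entirely, so the model never
    # sees which request the response answers. Keeping the assistant tag is an
    # assumption of this sketch.
    prompt = [ASSISTANT_TAG]
    answer = response.split()
    return TrainingExample(prompt + answer, [False] * len(prompt) + [True] * len(answer))


it = instruction_tuning_example("Write a haiku about rain", "Soft rain on the roof")
rt = response_tuning_example("Soft rain on the roof")
print(it.tokens)
print(rt.tokens)
```

Both setups train on exactly the same response tokens; only the conditioning context differs.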

Key findings include:

Response Tuning: Training on responses alone still yields instruction-following behavior. For example, response-tuned models won 43% of head-to-head comparisons against instruction-tuned models, suggesting that pretraining already encodes an implicit mapping from instructions to appropriate responses.

Single-Task Finetuning: Models finetuned on narrow-domain tasks, such as generating poetry or code, also exhibit general instruction-following abilities. For instance, a poetry-tuned model still generated a variety of responses beyond poetry and won 23.7% of head-to-head comparisons against an instruction-tuned model.

These observations imply that the ability to follow instructions might be inherent to language models, even when they are adapted for specific tasks.

To understand why instruction following arises from such adaptations, the authors built a simple rule-based system on top of the base model. Three basic rules (slowly increasing the probability of ending the sequence, modifying the likelihood of a few tokens, and penalizing repetition) were enough to make the model exhibit instruction-following behavior. This rule-based model performed significantly better than the base model in head-to-head comparisons.
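The three rules can be pictured as hand-written adjustments to the base model's next-token logits. The sketch below is only an illustration under stated assumptions: the function name, the token set, and every numeric value (`eos_slope`, `token_shift`, `repetition_penalty`) are hypothetical placeholders, and the repetition rule uses a CTRL-style penalty rather than whatever exact form the authors chose.

```python
import math


def rule_based_logits(
    logits: list[float],
    generated: list[int],
    eos_id: int,
    shifted_ids: set[int],
    eos_slope: float = 0.1,
    token_shift: float = 1.0,
    repetition_penalty: float = 1.5,
) -> list[float]:
    """Apply three hand-written rules to next-token logits (all values are placeholders)."""
    adjusted = list(logits)
    # Rule 1: raise the end-of-sequence score a little more at every generated step.
    adjusted[eos_id] += eos_slope * len(generated)
    # Rule 2: shift the scores of a small, fixed set of tokens.
    for tok in shifted_ids:
        adjusted[tok] += token_shift
    # Rule 3: penalize tokens that already appeared (CTRL-style repetition penalty).
    for tok in set(generated):
        if adjusted[tok] > 0:
            adjusted[tok] /= repetition_penalty
        else:
            adjusted[tok] *= repetition_penalty
    return adjusted


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Toy vocabulary of 4 tokens, with token 0 as end-of-sequence.
probs = softmax(rule_based_logits([1.0, 2.0, -1.0, 0.5], generated=[1, 3], eos_id=0, shifted_ids={2}))
print([round(p, 3) for p in probs])
```

Because the EOS boost grows with the number of generated tokens, longer outputs become progressively more likely to stop, which is one way a model can learn to produce a bounded "response".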

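Discovering the Gems in Early Layers: Accelerating Long-Context LLMs with 1000x Input Token Reduction

This paper discusses advancements in optimizing LLMs for processing long-context inputs, which is a critical requirement for many AI applications.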

The paper introduces a new method called GemFilter, which aims to accelerate LLM generation speed and reduce GPU memory usage.

LLMs rely on the KV cache for efficient text generation, storing intermediate attention keys and values during the prompt computation phase. While existing methods like H2O and SnapKV effectively compress this KV cache during the iterative generation phase, they do not address the efficiency of the initial prompt computation phase, which becomes a bottleneck as input length increases.

Key Observations and Contributions:

Identifying Relevant Tokens Early: The authors found that LLMs can already identify the information needed to answer a query in their early layers, which they call "filter layers." For example, Llama 3.1 distills the essential information within its 13th to 19th layers, which makes it possible to compress the input drastically (e.g., from 128K tokens to just 100).

GemFilter Method: Based on this observation, the authors developed GemFilter, which leverages early filter layers to select and compress the input tokens into a smaller subset for the full model inference. This results in reduced time and GPU memory usage during the prompt computation phase.

Performance: GemFilter achieved a 2.4× speedup and 30% reduction in GPU memory consumption compared to state-of-the-art methods like SnapKV, outperforming them on the Needle in a Haystack benchmark and performing comparably on the LongBench benchmark.
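The selection step can be sketched as follows, assuming we already have the attention weights of the last prompt token at one filter layer (in GemFilter, obtained by running only the first layers of the model). Summing over heads, the function names, and the toy numbers are assumptions of this sketch, not the released implementation; the full model would then run on the selected tokens only.

```python
def select_token_positions(last_query_attn: list[list[float]], k: int) -> list[int]:
    """Choose k prompt positions from one filter layer's attention.

    last_query_attn[h][j] is head h's attention weight from the last prompt
    token to position j. Summing over heads is an assumption of this sketch.
    """
    num_positions = len(last_query_attn[0])
    scores = [sum(head[j] for head in last_query_attn) for j in range(num_positions)]
    top_k = sorted(range(num_positions), key=lambda j: scores[j], reverse=True)[:k]
    # Restore the original order so the compressed prompt keeps its token sequence.
    return sorted(top_k)


def compress_prompt(token_ids: list[int], last_query_attn: list[list[float]], k: int) -> list[int]:
    keep = select_token_positions(last_query_attn, k)
    return [token_ids[j] for j in keep]


# Toy example: 2 heads attending over a 6-token prompt.
attn = [
    [0.05, 0.40, 0.05, 0.10, 0.30, 0.10],
    [0.10, 0.30, 0.05, 0.05, 0.40, 0.10],
]
print(compress_prompt([101, 102, 103, 104, 105, 106], attn, k=2))
```

Keeping the selected tokens in their original order matters: the compressed prompt is fed back to the full model as ordinary text, so the relative order of the kept tokens should be preserved.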

They released their code here:

GitHub: SalesforceAIResearch/GemFilter

VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models

This paper by Microsoft discusses weight-only quantization methods for LLMs, focusing primarily on Post-Training Quantization (PTQ) and the more recent Vector Quantization (VQ).

PTQ quantizes model weights directly, without retraining, often using scalar quantization, which converts each weight individually to a lower-bit fixed-point number. Recent methods have achieved near-original accuracy with 3- to 4-bit quantization, but scalar quantization struggles at extremely low bit-widths such as 2 bits, because so few values remain representable: a 2-bit scalar can take only four distinct values.
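A minimal sketch of scalar quantization helps show why 2 bits is so restrictive. It assumes plain asymmetric min-max, round-to-nearest quantization of a single weight group; real PTQ methods use smarter scales and error compensation, and the function names here are illustrative.

```python
def quantize_scalar(weights: list[float], bits: int = 2) -> tuple[list[int], float, float]:
    """Asymmetric min-max (round-to-nearest) quantization of one weight group."""
    levels = 2 ** bits - 1  # 2 bits -> integer codes 0..3, i.e., four values
    w_min, w_max = min(weights), max(weights)
    scale = (w_max - w_min) / levels if w_max > w_min else 1.0
    codes = [min(levels, max(0, round((w - w_min) / scale))) for w in weights]
    return codes, scale, w_min


def dequantize_scalar(codes: list[int], scale: float, w_min: float) -> list[float]:
    return [c * scale + w_min for c in codes]


weights = [-0.9, -0.35, -0.1, 0.05, 0.2, 0.6, 0.75, 1.1]
codes, scale, w_min = quantize_scalar(weights, bits=2)
restored = dequantize_scalar(codes, scale, w_min)
print(codes)
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

With 2 bits, eight distinct weights collapse onto at most four values, and the worst-case rounding error is half a quantization step, which is large when the step covers a third of the weight range.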

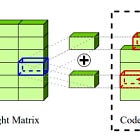

In contrast, VQ splits the weights into short vectors and replaces each vector with the index of its nearest entry in a codebook of representative vectors. Because a whole vector is encoded at once, VQ can exploit interdependencies across weight dimensions and compress more efficiently. However, VQ still faces challenges with accuracy, efficient execution, and dequantization overhead. For example, methods like GPTVQ suffer from larger quantization errors as the vector length increases, while others, like AQLM, converge slowly during training because they rely on gradient estimation.
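Here is a minimal sketch of the basic VQ mechanics: nearest-centroid assignment, dequantization by table lookup, and the bits-per-weight arithmetic. The codebook values are made up, and the cost of storing the codebook itself is ignored; this is generic VQ, not VPTQ.

```python
import math


def vq_quantize(weights: list[float], codebook: list[list[float]], vector_len: int) -> list[int]:
    """Replace each length-vector_len chunk of weights by its nearest codebook index."""
    if len(weights) % vector_len != 0:
        raise ValueError("weights must split evenly into vectors")
    indices = []
    for start in range(0, len(weights), vector_len):
        vec = weights[start:start + vector_len]
        # Nearest centroid under squared Euclidean distance.
        best = min(
            range(len(codebook)),
            key=lambda c: sum((w - x) ** 2 for w, x in zip(vec, codebook[c])),
        )
        indices.append(best)
    return indices


def vq_dequantize(indices: list[int], codebook: list[list[float]]) -> list[float]:
    # Dequantization is a table lookup: concatenate the selected codebook vectors.
    return [x for i in indices for x in codebook[i]]


def index_bits_per_weight(codebook_size: int, vector_len: int) -> float:
    # Each index costs log2(codebook_size) bits and covers vector_len weights
    # (the codebook's own storage is ignored here).
    return math.log2(codebook_size) / vector_len


codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0], [0.5, -0.5]]
idx = vq_quantize([0.9, 1.1, -0.8, 0.9, 0.4, -0.6], codebook, vector_len=2)
print(idx, vq_dequantize(idx, codebook))
print(index_bits_per_weight(65536, 8))  # 16-bit indices over 8-dim vectors
```

The arithmetic shows the trade-off: reaching 2 bits per weight with 8-dimensional vectors requires a 65,536-entry codebook, which is why codebook size, lookup speed, and dequantization overhead become the main engineering concerns.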

To address these limitations, the paper introduces Vector Post-Training Quantization (VPTQ), which offers better accuracy, higher efficiency, and lower dequantization overhead for extreme low-bit quantization of LLMs. VPTQ relies on second-order optimization to guide quantization, achieving lower quantization errors than prior state-of-the-art methods and improving inference throughput by 1.6 to 1.8 times compared to them.
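VPTQ's full second-order optimization does not fit in a few lines. As a rough illustration of the general idea, and as an assumption about one possible ingredient rather than the paper's algorithm, here is a single k-means step in which each squared error is weighted by a diagonal Hessian estimate, so that weights whose errors hurt the layer output more pull harder on the centroids. The `h_diag` values are placeholders; second-order PTQ methods such as GPTQ estimate the Hessian from calibration inputs.

```python
def weighted_sq_error(vec: list[float], centroid: list[float], h: list[float]) -> float:
    # Sum of h_i * (w_i - c_i)^2: errors on "sensitive" weights (large h_i) cost more.
    return sum(hi * (w - c) ** 2 for w, c, hi in zip(vec, centroid, h))


def weighted_kmeans_step(
    vectors: list[list[float]],
    h_diag: list[list[float]],
    centroids: list[list[float]],
) -> tuple[list[int], list[list[float]]]:
    """One assignment + update step of Hessian-diagonal-weighted k-means (illustrative).

    Assumes all h_diag entries are positive.
    """
    assign = [
        min(range(len(centroids)), key=lambda c: weighted_sq_error(v, centroids[c], h))
        for v, h in zip(vectors, h_diag)
    ]
    new_centroids = []
    for c, old in enumerate(centroids):
        members = [i for i, a in enumerate(assign) if a == c]
        if not members:
            new_centroids.append(list(old))  # keep empty clusters unchanged
            continue
        # Per-dimension weighted mean minimizes the weighted squared error.
        new_centroids.append([
            sum(h_diag[i][d] * vectors[i][d] for i in members)
            / sum(h_diag[i][d] for i in members)
            for d in range(len(old))
        ])
    return assign, new_centroids


vectors = [[0.0, 0.0], [1.0, 0.0], [10.0, 10.0]]
h = [[1.0, 1.0], [3.0, 1.0], [1.0, 1.0]]
assign, cents = weighted_kmeans_step(vectors, h, [[0.0, 0.0], [10.0, 10.0]])
print(assign, cents)
```

With uniform weights this reduces to ordinary k-means; the larger weight on the second vector's first dimension pulls the first centroid toward it.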

They released their code here:

GitHub: microsoft/VPTQ

For now, the quantization code isn’t published, but one of the authors told me that they will release it soon.

Making Text Embedders Few-Shot Learners

This paper discusses LLMs for generating text embeddings, which are vector representations that capture the semantic and contextual meaning of natural language text. These embeddings are crucial for a range of NLP tasks, such as information retrieval, text classification, item recommendation, and question answering.
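For retrieval, embeddings are typically compared with cosine similarity. The sketch below ranks documents for a query; the 3-dimensional vectors are made up for illustration, while real embedding models output vectors with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def rank_by_similarity(query: list[float], docs: list[list[float]]) -> list[int]:
    """Return document indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query, d) for d in docs]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)


# Made-up 3-dimensional "embeddings".
query = [0.9, 0.1, 0.0]
docs = [[0.0, 1.0, 0.0], [1.0, 0.2, 0.0], [0.5, 0.5, 0.7]]
print(rank_by_similarity(query, docs))
```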

Embedding models have traditionally been based on bidirectional encoder and encoder-decoder architectures, which produce high-quality text embeddings thanks to extensive pre-training. More recently, however, the field has shifted toward decoder-only architectures, which have shown improvements in accuracy and generalization, especially with supervised learning. Despite this progress, embedding models often struggle with unseen task instructions and complex retrieval tasks because of the narrow range of instructions encountered during training.

Key Points of the paper:

In-Context Learning (ICL): The study leverages the in-context learning capabilities of LLMs, which allow models to adapt to new and complex tasks by incorporating task-specific examples directly into input prompts. This approach enables LLMs to generate embeddings that are more adaptable and relevant to various contexts without additional training.

Proposed Approach - bge-en-icl: The researchers introduce a model, bge-en-icl, which enhances query embeddings by incorporating few-shot examples into the query prompt, utilizing the ICL capabilities of LLMs. This integration allows the model to generate more generalizable embeddings across different domains.

Simplicity over Complexity: The study evaluates different architectural modifications, such as changes to attention mechanisms and pooling methods, and finds that complex modifications do not significantly improve performance. Instead, the original, unmodified architecture, combined with the ICL strategy, yields the best results.
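A minimal sketch of the two pieces described above: building a few-shot query prompt and pooling a single embedding from the model's hidden states. The `<instruct>`/`<query>`/`<response>` tags, the blank-line separator, and the helper names are assumptions for illustration; check the model card for the exact format. Last-token pooling is a common choice for decoder-only embedders and is also an assumption here. Following the description above, examples are added only on the query side.

```python
def build_icl_query(task: str, query: str, examples: list[tuple[str, str]]) -> str:
    """Prepend few-shot (query, response) examples to a retrieval query.

    The <instruct>/<query>/<response> tags and blank-line separator are
    assumptions for illustration; check the model card for the exact format.
    """
    shots = [f"<instruct>{task}\n<query>{q}\n<response>{r}" for q, r in examples]
    return "\n\n".join(shots + [f"<instruct>{task}\n<query>{query}"])


def last_token_pool(hidden_states: list[list[float]], attention_mask: list[int]) -> list[float]:
    """Use the hidden state of the last real (non-padding) token as the embedding.

    Works for left or right padding because it looks for the last position whose mask is 1.
    """
    last = max(i for i, m in enumerate(attention_mask) if m == 1)
    return hidden_states[last]


prompt = build_icl_query(
    task="Given a web search query, retrieve relevant passages that answer the query.",
    query="how are text embeddings used in retrieval?",
    examples=[("what is a codebook?", "A codebook is a table of representative vectors.")],
)
print(prompt)
```

Since the examples live entirely in the input text, switching tasks only means changing the examples; the model weights and architecture stay untouched, which matches the paper's finding that the unmodified architecture works best.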

The authors released their code here:

GitHub: FlagOpen/FlagEmbedding

If you have any questions about one of these papers, write them in the comments. I will answer them.