This week, we read:

⭐70% Size, 100% Accuracy: Lossless LLM Compression for Efficient GPU Inference via Dynamic-Length Float

Could Thinking Multilingually Empower LLM Reasoning?

SFT or RL? An Early Investigation into Training R1-Like Reasoning Large Vision-Language Models

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

Could Thinking Multilingually Empower LLM Reasoning?

This paper looks into how using multiple languages can improve the reasoning abilities of LLMs. Even though these models are trained on a wide variety of languages, English tends to dominate because it makes up far more of the training data. That means models usually perform better when tasks are in English, especially on more complex reasoning problems.

However, recent work shows that some models actually do better in other languages, including ones that aren’t typically seen as high-resource. So, what happens when you combine model responses across multiple languages?

The authors of this paper tested this using two reasoning-focused datasets, translated into 17 different languages, and found that mixing in answers from multiple languages gave a big performance boost. One task jumped from around 45 percent accuracy to 90 percent, and another came close to perfect.

They made sure the improvement wasn’t just due to random chance or different ways of phrasing the questions. Even with just a few languages, the multilingual approach started to outperform English-only runs. It didn’t seem to matter much which specific languages were used, and human versus machine translations didn’t change the outcome either.
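To make the idea of "mixing in answers from multiple languages" concrete, here is a minimal sketch. It is not the authors' code: the summary above doesn't say exactly how answers are combined, so the sketch shows two common choices, a majority vote across languages and an "any language got it right" upper bound. The questions, answers, and language codes are made up for illustration.

```python
from collections import Counter


def majority_vote(answers):
    """Return the most frequent answer among the per-language answers."""
    return Counter(answers).most_common(1)[0][0]


def any_correct(answers, gold):
    """Upper bound: the question counts as solved if any language is right."""
    return gold in answers


# Hypothetical model outputs for three multiple-choice questions,
# each asked in four languages (toy data, not from the paper).
responses = [
    {"gold": "B", "answers": {"en": "A", "fr": "B", "zh": "B", "sw": "C"}},
    {"gold": "D", "answers": {"en": "A", "fr": "A", "zh": "D", "sw": "C"}},
    {"gold": "C", "answers": {"en": "C", "fr": "C", "zh": "A", "sw": "C"}},
]

n = len(responses)
english_acc = sum(r["answers"]["en"] == r["gold"] for r in responses) / n
vote_acc = sum(
    majority_vote(list(r["answers"].values())) == r["gold"] for r in responses
) / n
upper_bound = sum(
    any_correct(r["answers"].values(), r["gold"]) for r in responses
) / n

print(f"English only:  {english_acc:.2f}")
print(f"Majority vote: {vote_acc:.2f}")
print(f"Any language:  {upper_bound:.2f}")
```

In this toy data, the "any language" score is higher than the majority vote, which is a useful reminder that an upper bound across languages and a practical aggregation rule are not the same thing.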

The code to reproduce their evaluation has been released here:

GitHub: CONE-MT/multilingual_reasoning

⭐70% Size, 100% Accuracy: Lossless LLM Compression for Efficient GPU Inference via Dynamic-Length Float

While effective in reducing resource usage, quantization is a lossy technique, meaning it alters the model’s output to some degree and can negatively affect accuracy.

Lossless compression, on the other hand, keeps the model outputs exactly the same by preserving the original weights. While this is ideal in terms of maintaining performance, existing lossless methods mostly focus on storage benefits or niche hardware setups and aren’t well-suited for efficient inference on standard GPUs.

The authors point out that the BFloat16 format, often used to store weights in LLMs, is not very efficient, especially in how it uses the exponent bits. After analyzing how exponent values are distributed in practice, they found that the exponent field carries much less information than the 8 bits it occupies, which opens the door to lossless compression. Using entropy coding techniques like Huffman coding, they show it’s possible to reduce model size by around 30 percent without losing any information.
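The intuition can be checked with a small experiment. The sketch below is only an illustration under stated assumptions, not the paper's implementation: it draws synthetic Gaussian "weights" (real model weights will differ), truncates them to BFloat16, measures the Shannon entropy of the 8-bit exponent field, and builds a Huffman code over the exponents. It assumes that only the exponent is entropy coded while the sign bit and the 7 mantissa bits are stored as-is.

```python
import heapq
import math
import random
import struct
from collections import Counter


def bf16_exponent(x: float) -> int:
    """Exponent field of x after truncating its float32 bits to BFloat16."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    bits16 = bits32 >> 16  # sign (1 bit), exponent (8 bits), mantissa (7 bits)
    return (bits16 >> 7) & 0xFF


def shannon_entropy(counts: Counter) -> float:
    """Entropy in bits per symbol of the empirical distribution."""
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values())


def huffman_code_lengths(counts: Counter) -> dict:
    """Code length in bits for each symbol under a Huffman code."""
    # Heap entries: (count, unique id for tie-breaking, {symbol: depth}).
    heap = [(c, i, {sym: 0}) for i, (sym, c) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        c1, _, d1 = heapq.heappop(heap)
        c2, _, d2 = heapq.heappop(heap)
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (c1 + c2, next_id, merged))
        next_id += 1
    return heap[0][2]


random.seed(0)
# Synthetic stand-in for model weights (assumption: zero-mean Gaussian).
weights = [random.gauss(0.0, 0.02) for _ in range(100_000)]

counts = Counter(bf16_exponent(w) for w in weights)
entropy = shannon_entropy(counts)
lengths = huffman_code_lengths(counts)
avg_exp_bits = sum(counts[s] * lengths[s] for s in counts) / len(weights)
bits_per_weight = 1 + 7 + avg_exp_bits  # sign + mantissa + coded exponent

print(f"exponent entropy:        {entropy:.2f} bits (out of 8)")
print(f"avg Huffman exponent:    {avg_exp_bits:.2f} bits")
print(f"estimated bits / weight: {bits_per_weight:.2f} (vs. 16 for BFloat16)")
```

The exact numbers depend on the weight distribution, but the gap between the measured exponent entropy and the 8 bits allocated to it is where the savings come from.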

However, decoding compressed weights during inference becomes a bottleneck, since methods like Huffman decoding don’t align well with how GPUs work. GPUs are optimized for parallel tasks, but standard decoding is sequential and slow, leading to poor hardware utilization.

To solve this, the paper introduces a new format called Dynamic-Length Float (DFloat11), which compresses weights to about 11 bits while preserving full accuracy. They also develop a GPU-optimized kernel for decompressing these weights efficiently. The design uses compact lookup tables stored in shared memory, a method for calculating thread-specific read and write positions, and batch-level decompression to maximize GPU usage.
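To give a feel for why lookup tables help with decoding, here is a toy sketch of table-driven prefix-code decoding. It is plain, sequential Python rather than a GPU kernel, and it does not reproduce the paper's kernel design: the four-symbol code, the function names, and the single flat table are all hypothetical. The point is only that each symbol can be decoded with one table read on a fixed-width bit window instead of walking a tree bit by bit.

```python
# Toy prefix code (hypothetical; a real code would be built from weight statistics).
CODES = {"a": "0", "b": "10", "c": "110", "d": "111"}
MAX_LEN = max(len(code) for code in CODES.values())


def build_lut(codes, max_len):
    """Map every max_len-bit window to (symbol, code length)."""
    lut = [None] * (1 << max_len)
    for sym, code in codes.items():
        pad = max_len - len(code)
        base = int(code, 2) << pad
        # Every window that starts with this code decodes to this symbol.
        for tail in range(1 << pad):
            lut[base + tail] = (sym, len(code))
    return lut


def encode(symbols, codes):
    """Concatenate the code words into one bit string."""
    return "".join(codes[s] for s in symbols)


def decode(bits, lut, max_len, n_symbols):
    """Decode n_symbols by repeatedly peeking max_len bits and reading the table."""
    out, pos = [], 0
    padded = bits + "0" * max_len  # padding so the last window is always full
    for _ in range(n_symbols):
        window = int(padded[pos:pos + max_len], 2)
        sym, length = lut[window]
        out.append(sym)
        pos += length  # advance only by the true code length
    return out


LUT = build_lut(CODES, MAX_LEN)
message = list("abacadabcd")
bits = encode(message, CODES)
decoded = decode(bits, LUT, MAX_LEN, len(message))
print(bits, "->", "".join(decoded))
```

Note that the position of each symbol still depends on the lengths of all the symbols before it, which is the sequential dependency a parallel decoder has to work around, for example by computing per-thread read and write positions as described above.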

This is very impressive work! And the code is available here:

GitHub: LeanModels/DFloat11 (Apache 2.0 license)

SFT or RL? An Early Investigation into Training R1-Like Reasoning Large Vision-Language Models

This work explores whether the standard two-stage training recipe for large vision-language models (VLMs), supervised fine-tuning (SFT) followed by reinforcement learning (RL), actually helps when it comes to complex multimodal reasoning.

The authors introduce a new dataset called VLAA-Thinking, which is designed specifically for training and testing reasoning in image-text tasks. The dataset includes detailed step-by-step reasoning traces and is split into parts tailored for both SFT and RL, with examples that either guide models through reasoning or push them to figure things out more independently.

They use a multi-step process to build the dataset, starting with image captions and questions, generating reasoning paths with DeepSeek-R1, rewriting those for clarity, and then verifying them with a GPT-based model to ensure quality.

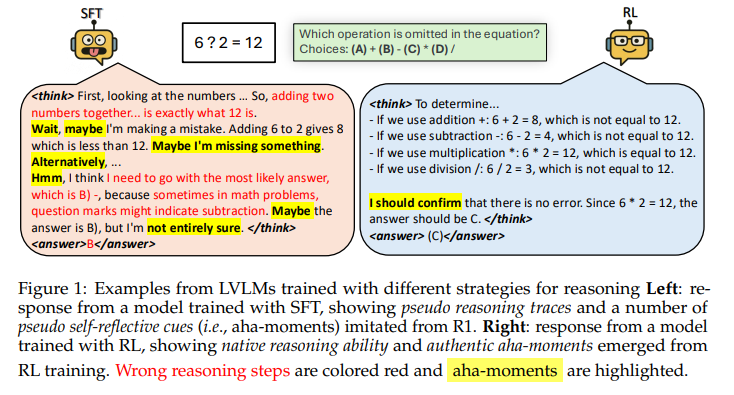

The authors then run experiments to understand what SFT and RL each contribute. They find that SFT alone improves performance on simpler tasks but falls short on harder reasoning, as expected. It often leads to surface-level, imitation-based answers, which can actually hurt performance, especially in more complex models. RL, on the other hand, encourages more flexible and genuinely thoughtful responses, especially when trained with carefully designed rewards that account for both correct answers and thoughtful reasoning.

Interestingly, combining SFT and RL didn’t always help. In some cases, starting with SFT made the model worse when it moved on to RL, showing that imitation-first training can interfere with deeper learning from reward signals. This effect was seen even in large models, suggesting the issue isn’t fixed by just scaling up.

In the end, the study finds that skipping SFT and training directly with RL tends to produce better reasoning in multimodal models.