Reviewed this week:

⭐BitNet a4.8: 4-bit Activations for 1-bit LLMs

Self-Consistency Preference Optimization

"Give Me BF16 or Give Me Death"? Accuracy-Performance Trade-Offs in LLM Quantization

NeuZip: Memory-Efficient Training and Inference with Dynamic Compression of Neural Networks

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

⭐BitNet a4.8: 4-bit Activations for 1-bit LLMs

Recent studies show that 1-bit LLMs can perform similarly to full-precision models. These low-bit models reduce memory footprint, energy consumption, and latency.

I recently wrote about “1-bit” LLMs for very efficient inference on the CPU.

This new paper by Microsoft goes a bit further with ternary LLMs by quantizing the activations.

With 1-bit LLMs, the inference bottleneck shifts from memory access to computation. Activation sparsity and quantization can both reduce this computational load. Sparsity drops low-magnitude activations so that computation focuses on the ones that matter most. This works well for activations with long-tailed distributions, where most values are small and contribute little to the final result.

Alongside sparsity, quantization reduces computation further by lowering the bitwidth of activations. However, at very low bitwidths, a few large-magnitude "outliers" dominate the quantization range and cause large errors for all the other values. Some techniques handle outliers in higher-precision models, but they are hard to apply to 1-bit models because they add computational overhead.
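To see why outliers are a problem, here is a minimal sketch of symmetric absmax quantization to 4 bits. It is a generic quantizer I chose for illustration, not necessarily the one used in the paper. A single large activation sets the scale, and all the small values round to zero:

```python
def absmax_quantize(x, bits):
    """Quantize a list of floats to signed `bits`-bit integers using one absmax scale,
    then dequantize back to floats so that the rounding error is visible."""
    qmax = 2 ** (bits - 1) - 1              # 7 for 4-bit
    max_abs = max(abs(v) for v in x)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax - 1, min(qmax, round(v / scale))) * scale for v in x]


small = [0.10, -0.20, 0.15, 0.05]
with_outlier = small + [12.0]

print(absmax_quantize(small, bits=4))         # small values keep distinct nonzero levels
print(absmax_quantize(with_outlier, bits=4))  # the four small values all become 0.0
```

With only 16 levels available, the outlier consumes almost the entire range. This is the problem BitNet a4.8 has to work around.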

BitNet a4.8, proposed in this paper, combines quantization and sparsity. It uses 4-bit activations for the inputs to the attention and feed-forward layers, and sparsifies the intermediate states before quantizing them to 8 bits. BitNet a4.8 is trained with a two-stage recipe that moves from 8-bit to 4-bit activations, and this transition requires only a small amount of training data.
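The two activation paths can be sketched as follows. This is a simplified illustration under my own assumptions: a generic absmax quantizer and a plain top-k magnitude filter stand in for the paper's exact quantization and sparsification functions. `prepare_activation` and its `role` argument are hypothetical helpers and are not part of any released code.

```python
def absmax_quantize(x, bits):
    """Symmetric absmax quantization; returns (signed integer codes, float scale)."""
    qmax = 2 ** (bits - 1) - 1                   # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(v) for v in x)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax - 1, min(qmax, round(v / scale))) for v in x]
    return codes, scale


def topk_sparsify(x, keep_ratio):
    """Keep the `keep_ratio` fraction of entries with the largest magnitude, zero the rest."""
    k = max(1, int(len(x) * keep_ratio))
    keep = set(sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(x)]


def prepare_activation(x, role, keep_ratio=0.5):
    """Hypothetical routing of one token's activations.

    'input'        -> 4-bit quantization (inputs to attention / feed-forward layers)
    'intermediate' -> top-k sparsification, then 8-bit quantization
    """
    if role == "input":
        return absmax_quantize(x, bits=4)
    if role == "intermediate":
        return absmax_quantize(topk_sparsify(x, keep_ratio), bits=8)
    raise ValueError(f"unknown role: {role!r}")


print(prepare_activation([0.5, -1.2, 0.1, 3.0], "input"))
print(prepare_activation([0.02, -4.0, 0.3, 0.01, 2.5, -0.05, 0.0, 0.7], "intermediate"))
```

As I understand the design, the split follows where the outliers are. Block inputs are well-behaved enough for 4 bits, while intermediate states keep a few large values that need 8 bits. Sparsification lets a kernel skip most of those intermediate entries.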

Experiments show that BitNet a4.8 performs on par with BitNet b1.58 at the same training cost, while being faster at inference thanks to 4-bit (INT4/FP4) kernels.

Self-Consistency Preference Optimization

Training LLMs on human-labeled data has improved their accuracy across tasks, but collecting high-quality labels is costly and slow. To get around this, recent methods rely on self-training, where models generate their own training data. However, models that judge their own responses often struggle on complex reasoning tasks that have a single correct answer, such as math problems.

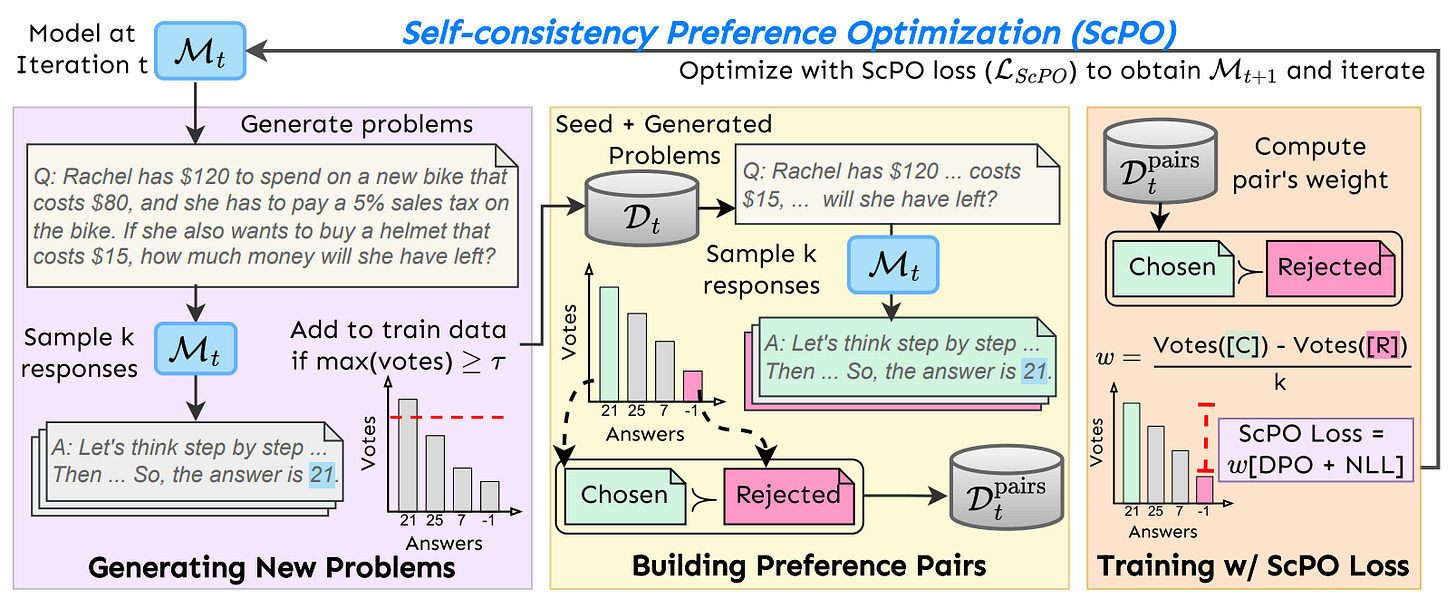

To address this, Self-Consistency Preference Optimization (SCPO) trains LLMs on difficult tasks without labeled answers. SCPO relies on self-consistency: the model samples several answers to the same question, and the most frequent answer is assumed to be the most likely correct one. SCPO builds preference pairs from the most and least consistent answers and trains the model with a loss that weights each pair by the model's confidence in that comparison. A semi-supervised variant of SCPO also uses human-labeled data when it is available.
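Here is a minimal sketch of how one preference pair could be built from sampled answers. It assumes the final answers have already been extracted as strings. The confidence weight is the vote margin divided by the number of samples, which is my reading of how SCPO weights pairs. Treat that weighting, the `beta` value, and the helper names `build_scpo_pair` and `weighted_dpo_loss` as assumptions. The loss is a standard DPO-style sigmoid loss scaled by that weight.

```python
import math
from collections import Counter


def build_scpo_pair(answers):
    """Turn k sampled final answers for one prompt into (chosen, rejected, weight).

    chosen   = most frequent answer (self-consistency / majority vote)
    rejected = least frequent answer
    weight   = vote margin normalized by the number of samples (assumed form)
    """
    ranked = Counter(answers).most_common()     # sorted by vote count, descending
    chosen, chosen_votes = ranked[0]
    rejected, rejected_votes = ranked[-1]
    if chosen == rejected:                      # all samples agree: no pair to learn from
        return None
    weight = (chosen_votes - rejected_votes) / len(answers)
    return chosen, rejected, weight


def weighted_dpo_loss(logp_chosen, logp_rejected, ref_chosen, ref_rejected, weight, beta=0.1):
    """-weight * log(sigmoid(beta * (policy log-ratio margin))), written as log1p(exp(-m))."""
    margin = beta * ((logp_chosen - ref_chosen) - (logp_rejected - ref_rejected))
    return weight * math.log1p(math.exp(-margin))


samples = ["42", "42", "42", "41", "40", "42", "41", "42"]
print(build_scpo_pair(samples))   # ('42', '40', 0.5): 5 votes vs. 1 vote out of 8
```

Questions where the votes are split evenly produce small weights, so uncertain pairs contribute less to the update.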

In tests with Llama 3 8B, SCPO improved accuracy by 22.74% on GSM8K and 5.26% on MATH, approaching the accuracy of supervised training. Semi-supervised SCPO, trained on both labeled data and model-generated questions, exceeded supervised performance by 2.35% on GSM8K. SCPO also improved accuracy on challenging logic puzzles.

"Give Me BF16 or Give Me Death"? Accuracy-Performance Trade-Offs in LLM Quantization

The high cost of running LLMs has led to various methods for speeding up inference, including quantization, speculative decoding, and model pruning. Quantization, which reduces the bitwidth of model weights and sometimes activations, is a common way to cut memory and compute demands. However, it usually trades some accuracy for these memory and speed gains, and there are few guidelines on what accuracy to expect at each compression level.

This study focuses on quantization formats that are practical to deploy: they lose little accuracy and have efficient kernel support. It evaluates 8-bit and 4-bit formats on the Llama 3.1 model family, using the vLLM inference engine across several GPU types and tasks.
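In the WxAy naming used below, x is the bitwidth of the weights and y is the bitwidth of the activations. Here is a back-of-the-envelope sketch of what this means for weight storage. It assumes every parameter is quantized and ignores the small overhead of scales. Real checkpoints also keep some layers, such as embeddings, in higher precision.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in GB (1 GB = 1e9 bytes), ignoring scales and unquantized layers."""
    return num_params * bits_per_weight / 8 / 1e9


formats = {"BF16": 16, "W8A8 (8-bit weights)": 8, "W4A16 (4-bit weights)": 4}
for name, bits in formats.items():
    print(f"{name}: ~{weight_memory_gb(70e9, bits):.0f} GB for a 70B-parameter model")
```

W4A16 only shrinks the weights. The matrix multiplications still run in 16-bit after the weights are dequantized. W8A8 formats, by contrast, can use 8-bit GPU arithmetic. This is one reason the two families win in different deployment settings.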

Key findings include:

W8A8-FP Quantization: Essentially preserves the original model's accuracy across all benchmarks, using dynamic per-token activation quantization and round-to-nearest (RTN) weight quantization.

W8A8-INT Quantization: When properly tuned, loses only 1-3% accuracy per task, much less than the drops reported in previous work.

W4A16-INT Quantization: Maintains low accuracy loss and is competitive with W8A8-INT, showing strong performance in real-world tasks.

Text Generation Analysis: Large quantized models generally retain the original model’s word choices and structure, while smaller models show slight variability but preserve meaning.

Inference Performance: W4A16-INT is the most cost-efficient for synchronous deployments and for asynchronous deployment on mid-range GPUs, while W8A8 formats work best for asynchronous (continuous batching) deployment of mid-size and large models on high-end GPUs.
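To make the W8A8 scheme concrete, here is a minimal pure-Python sketch of an INT8 linear layer. It uses dynamic per-token activation scales, computed at run time with one per token, and per-output-channel weight scales, both obtained by round-to-nearest. It illustrates the idea only. vLLM's actual kernels are fused GPU code. The FP8 variant replaces the integer grid with the FP8 number format, which is not modeled here.

```python
def quantize_rows(m, bits=8):
    """Symmetric round-to-nearest quantization with one scale per row; returns (int rows, scales)."""
    qmax = 2 ** (bits - 1) - 1
    q_rows, scales = [], []
    for row in m:
        max_abs = max(abs(v) for v in row)
        s = max_abs / qmax if max_abs > 0 else 1.0
        q_rows.append([max(-qmax - 1, min(qmax, round(v / s))) for v in row])
        scales.append(s)
    return q_rows, scales


def w8a8_linear(x, w):
    """y = x @ w.T, with x: tokens x in_features and w: out_features x in_features.

    Activations get dynamic per-token scales (one per row of x, computed on the fly).
    Weights get per-output-channel scales (one per row of w, computable offline).
    """
    qx, sx = quantize_rows(x)
    qw, sw = quantize_rows(w)
    y = []
    for i, xrow in enumerate(qx):
        out = []
        for j, wrow in enumerate(qw):
            acc = sum(a * b for a, b in zip(xrow, wrow))   # integer accumulation
            out.append(acc * sx[i] * sw[j])                # rescale back to float
        y.append(out)
    return y


x = [[0.5, -1.0, 2.0], [0.1, 0.2, -0.3]]    # 2 tokens, 3 input features
w = [[1.0, 0.5, -0.25], [-2.0, 0.0, 1.0]]   # 2 output channels
print(w8a8_linear(x, w))                    # close to the float result [[-0.5, 1.0], [0.275, -0.5]]
```

Because each scale factors out of the dot product, the inner loop is pure integer arithmetic. That is what lets W8A8 use the GPU's 8-bit tensor cores.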

NeuZip: Memory-Efficient Training and Inference with Dynamic Compression of Neural Networks

Note: I found this paper by reading this very good thread on X discussing LoRA vs. full fine-tuning performance.

NeuZip compresses neural network parameters without compromising model flexibility or precision. It does so by splitting the floating-point representation of each parameter into three components: the sign bit, the exponent bits, and the mantissa bits.
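A bfloat16 parameter, a common format for LLM weights, has 1 sign bit, 8 exponent bits, and 7 mantissa bits. Here is a small sketch of the split. It assumes bfloat16 values are obtained by truncating float32 (real converters usually round to nearest even), and the helper names are hypothetical.

```python
import struct


def to_bf16_bits(x):
    """16-bit bfloat16 pattern of x, obtained by truncating its float32 encoding."""
    (f32,) = struct.unpack("<I", struct.pack("<f", x))
    return f32 >> 16


def split_bf16(bits16):
    """Split a bfloat16 bit pattern into its (sign, exponent, mantissa) fields."""
    sign = bits16 >> 15               # 1 bit
    exponent = (bits16 >> 7) & 0xFF   # 8 bits, biased by 127
    mantissa = bits16 & 0x7F          # 7 bits; the implicit leading 1 is not stored
    return sign, exponent, mantissa


print(split_bf16(to_bf16_bits(-0.15625)))  # (1, 124, 32): -(1 + 32/128) * 2**(124 - 127)
```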

NeuZip exploits the low entropy of the exponent bits and compresses only them, using asymmetric numeral systems (ANS), a lossless entropy-coding method that runs efficiently on GPUs. The authors show that this reduces memory usage without any loss of precision.
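Why are the exponents so compressible? Here is a rough sketch that uses Gaussian-distributed weights with a standard deviation of 0.02 as a stand-in for real trained weights, which is an assumption. It measures the empirical Shannon entropy of the 8-bit exponent field. This is roughly the average number of bits per exponent that an ideal entropy coder such as ANS could approach. The sketch does not implement ANS itself.

```python
import math
import random
import struct
from collections import Counter


def bf16_exponent(x):
    """8-bit exponent field of x (identical in float32 and bfloat16)."""
    (f32,) = struct.unpack("<I", struct.pack("<f", x))
    return (f32 >> 23) & 0xFF


def entropy_bits(symbols):
    """Empirical Shannon entropy in bits per symbol."""
    counts = Counter(symbols)
    n = len(symbols)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


rng = random.Random(0)
weights = [rng.gauss(0.0, 0.02) for _ in range(100_000)]
h = entropy_bits([bf16_exponent(w) for w in weights])

print(f"exponent entropy: {h:.2f} bits (stored: 8 bits)")
print(f"ideal bits per weight: {1 + h + 7:.2f} (stored: 16)")
```

Most weights share a handful of exponent values, so the exponent entropy comes out well below 8 bits. The sign and mantissa bits, by contrast, look close to random and are left uncompressed.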

For inference, NeuZip adds a lossy variant that saves more memory by truncating the mantissa: only its top-k most significant bits are kept. This further reduces memory with little performance degradation.
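Here is a minimal sketch of mantissa truncation on a single bfloat16 value. It assumes simple truncation, though the paper's exact rounding may differ, and `truncate_mantissa` is a hypothetical helper. Keeping k of the 7 mantissa bits bounds the relative error by 2^-k for normal numbers.

```python
import struct


def truncate_mantissa(x, keep_bits):
    """Round x toward zero to a bfloat16 value that keeps only the top `keep_bits` of its 7 mantissa bits."""
    if not 0 <= keep_bits <= 7:
        raise ValueError("keep_bits must be between 0 and 7")
    (f32,) = struct.unpack("<I", struct.pack("<f", x))
    bf16 = f32 >> 16                      # sign | 8 exponent bits | 7 mantissa bits
    drop = 7 - keep_bits
    bf16 = (bf16 >> drop) << drop         # zero the low-order mantissa bits
    (y,) = struct.unpack("<f", struct.pack("<I", bf16 << 16))
    return y


for k in (7, 5, 3, 1):
    print(k, truncate_mantissa(1.2, k))   # 1.1953125, 1.1875, 1.125, 1.0
```

Each dropped bit saves memory on every parameter but doubles the worst-case relative error. In practice, this means only a few mantissa bits can be dropped before accuracy suffers.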

The authors released their code here:

GitHub: BorealisAI/neuzip