Qwen2-VL: How Does It Work?

One of the best VLMs for image captioning, visual question answering, optical character recognition (OCR), and multimodal chat.

Alibaba’s Qwen2-VL models are state-of-the-art vision-language models (VLMs) available in three sizes: 2B, 7B, and 72B parameters. These generative models accept multimodal inputs: text, one or more images, and videos more than 20 minutes long.
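To make "multimodal inputs" concrete, here is a minimal sketch of how a mixed text-and-image prompt is commonly laid out as a chat message for Qwen2-VL-style models. The helper `build_user_message` and the file names are hypothetical, introduced only for illustration; the `{"type": ..., ...}` content entries follow the chat-message convention used in the Hugging Face examples for these models, which is an assumption here rather than something this article specifies.

```python
from typing import Any, Dict, List, Optional


def build_user_message(
    text: str,
    images: Optional[List[str]] = None,
    videos: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Hypothetical helper: pack text, images, and videos into one user turn."""
    content: List[Dict[str, str]] = []
    # Visual inputs come first, one entry per file or URL.
    for path in images or []:
        content.append({"type": "image", "image": path})
    for path in videos or []:
        content.append({"type": "video", "video": path})
    # The text instruction goes last.
    content.append({"type": "text", "text": text})
    return {"role": "user", "content": content}


# Example: a single question about two images (placeholder file names).
messages = [
    build_user_message(
        "What differs between these two pages?",
        images=["page1.png", "page2.png"],
    )
]
```

A list like `messages` would then typically be passed through the model's chat template and processor before generation; those steps are omitted here because they depend on the library version in use.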

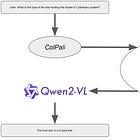

Qwen2-VL models currently rank among the top open-source VLMs for tasks such as image captioning, visual question answering, optical character recognition (OCR), and multimodal chat. They also perform well in multimodal retrieval-augmented generation (RAG) systems, which makes them versatile for handling complex multimodal data.

In this article, we will review the Qwen2-VL architecture and training to understand what makes it so effective. We'll explore, in plain English, the new techniques Qwen2-VL introduces to better encode complex inputs, such as text-dense images and videos.