This week, we review:

⭐Taming LLMs by Scaling Learning Rates with Gradient Grouping

Are Reasoning Models More Prone to Hallucination?

On-Policy RL with Optimal Reward Baseline

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

⭐Taming LLMs by Scaling Learning Rates with Gradient Grouping

This work tackles the trade-off between memory efficiency and optimization performance in LLM training. Adaptive optimizers like Adam have been central to deep learning, especially for training LLMs. However, their use of per-parameter statistics incurs heavy memory costs, limiting scalability, particularly in low-resource settings. For each parameter, two optimizer states (the first- and second-moment estimates) are created and stored in memory, so at the same precision the AdamW optimizer states consume about twice as much memory as the model weights. While approaches like parameter-efficient fine-tuning (PEFT) and state compression offer partial relief, they often rely on heuristics that are not consistently effective. Quantization tends to perform well, and I have never seen a significant difference in practice between 32-bit and 8-bit AdamW.
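To make the memory cost concrete, here is a rough back-of-the-envelope sketch. The function name and the 7B parameter count are my own choices for illustration, and the estimate ignores gradients, activations, and the small overhead of quantization constants in 8-bit optimizers.

```python
def adamw_memory_gib(num_params: int, weight_bytes: int = 4, state_bytes: int = 4) -> dict:
    """Rough memory estimate for model weights and AdamW states.

    AdamW keeps two values per parameter (first and second moment),
    hence the factor of 2 for the optimizer states.
    """
    gib = 1024 ** 3
    weights = num_params * weight_bytes / gib
    states = 2 * num_params * state_bytes / gib
    return {"weights_gib": weights, "adamw_states_gib": states}


# Hypothetical 7B-parameter model with 32-bit weights.
fp32_states = adamw_memory_gib(7_000_000_000, weight_bytes=4, state_bytes=4)
int8_states = adamw_memory_gib(7_000_000_000, weight_bytes=4, state_bytes=1)

print(f"32-bit AdamW states: {fp32_states['adamw_states_gib']:.1f} GiB")
print(f"8-bit AdamW states:  {int8_states['adamw_states_gib']:.1f} GiB")
```

With 32-bit states, the optimizer takes twice the memory of the 32-bit weights. With 8-bit states, it drops to half the memory of the weights.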

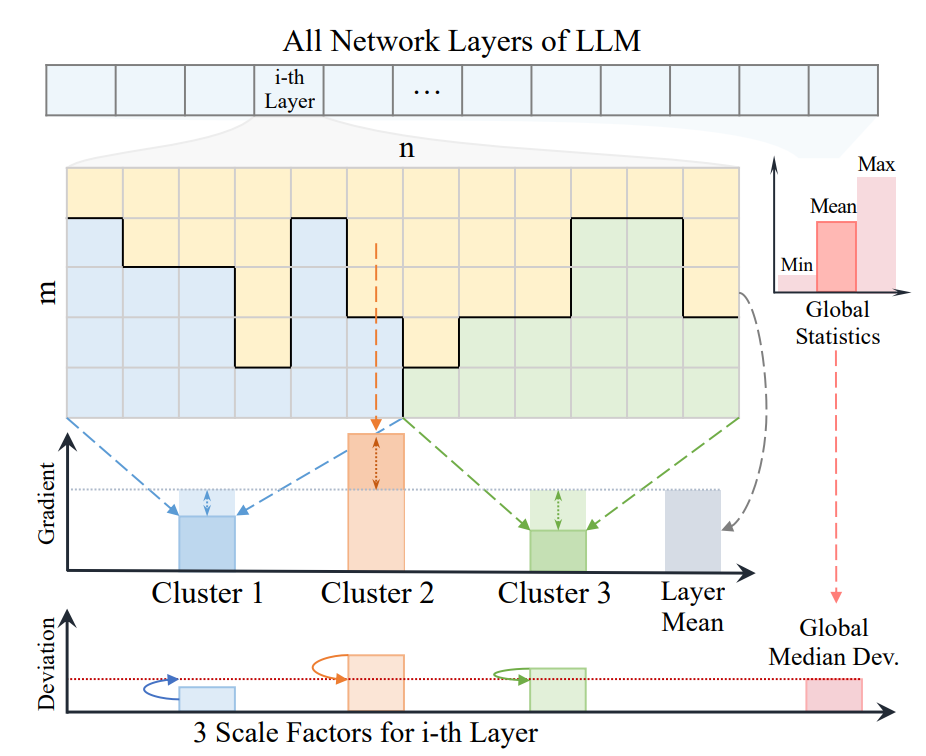

In this work, the authors propose Scaling with Gradient Grouping (SGG), an optimizer wrapper that clusters parameters based on gradient statistics within each layer and applies group-specific learning rate scaling. Unlike prior work that replaces parameter-level control with fixed group assignments, SGG dynamically groups parameters during training while preserving some degree of parameter-wise adaptability. This hybrid approach exploits the internal regularities observed across different model layers, such as shared optimization behaviors in attention and MLP blocks, and improves generalization and convergence speed.
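To give an intuition for what "grouping by gradient statistics, then scaling the learning rate per group" can look like, here is a toy sketch. It is not the authors' implementation: the tiny 1D k-means, the function names, and especially the scaling rule (layer median divided by group centroid, then clipped) are assumptions I made up for illustration.

```python
from statistics import median


def kmeans_1d(values, k, iters=20):
    """Tiny 1D k-means. Returns (assignments, centroids)."""
    lo, hi = min(values), max(values)
    # Initialize centroids evenly across the value range.
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    assign = [0] * len(values)
    for _ in range(iters):
        # Assign each value to its nearest centroid.
        assign = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, a in zip(values, assign) if a == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return assign, centroids


def group_lr_scales(grad_magnitudes, k=2, clip=(0.5, 2.0)):
    """Per-parameter learning-rate scale derived from group membership.

    Hypothetical rule: scale = layer median / group centroid, clipped.
    Groups with large gradients get a smaller step, and vice versa.
    """
    assign, centroids = kmeans_1d(grad_magnitudes, k)
    layer_ref = median(grad_magnitudes)
    scales = []
    for a in assign:
        raw = layer_ref / centroids[a] if centroids[a] > 0 else 1.0
        scales.append(max(clip[0], min(clip[1], raw)))
    return assign, scales


# Gradient magnitudes for the parameters of one (made-up) layer.
grads = [0.01, 0.012, 0.009, 0.5, 0.55, 0.011]
assign, scales = group_lr_scales(grads)
for g, a, s in zip(grads, assign, scales):
    print(f"grad={g:<6} group={a} lr_scale={s:.3f}")
```

In a wrapper like this, the base optimizer would still compute its usual per-parameter update, and the group scale would only multiply the learning rate on top of it. The groups are recomputed during training rather than fixed in advance.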

SGG integrates smoothly with existing optimizers and training pipelines without requiring architectural changes. Surprisingly, in the experiments conducted by the authors, it enables low-rank pretraining to achieve performance comparable to full-rank training.

This is interesting work, but I’m skeptical about the results. When LoRA outperforms full training, as in many papers presenting LoRA improvements, it always means that the full training’s hyperparameters are suboptimal or that the evaluation pipeline has some issues.

Are Reasoning Models More Prone to Hallucination?

This paper investigates whether large reasoning models (LRMs), i.e., LLMs post-trained to enhance long-form chain-of-thought (CoT) reasoning, are more prone to factual hallucinations. While these models, trained via supervised fine-tuning (SFT), reinforcement learning (RL), or both, excel at formal reasoning tasks like math and logic, their performance on fact-seeking tasks (e.g., SimpleQA and TriviaQA) varies significantly.

The study finds that many LRMs actually perform worse than their base models in terms of factual accuracy, especially those trained using only SFT or only RL. These models tend to exhibit specific flawed cognitive behaviors: flaw repetition, where they repeatedly pursue a flawed reasoning path, and think-answer mismatch, where their final answers don’t align with their reasoning. In contrast, models trained with both cold-start SFT and verifiable-reward RL show improved factuality and fewer hallucinations.

The authors further identify a key mechanism behind hallucination: miscalibrated internal uncertainty. RL-only and SFT-only LRMs often show poor alignment between predicted confidence and correctness, even losing uncertainty signals in their hidden states. Models trained from a full cold-start pipeline retain better calibration and factual consistency.
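To illustrate what "alignment between predicted confidence and correctness" means, here is a minimal sketch of expected calibration error (ECE), a standard calibration metric. I am not claiming this is the exact metric used in the paper, and the confidence values below are made up.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Expected calibration error with equal-width confidence bins.

    confidences: predicted probabilities in [0, 1] that the answer is right.
    correct: booleans, True when the answer was actually right.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Weight each bin's confidence/accuracy gap by its share of samples.
        ece += len(members) / n * abs(avg_conf - accuracy)
    return ece


# A perfectly calibrated toy model: sure when right, unsure when wrong.
calibrated = expected_calibration_error([1.0, 1.0, 0.0, 0.0], [True, True, False, False])

# An overconfident toy model: 90% confident but right only 25% of the time.
overconfident = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, False, False, False])

print(f"calibrated ECE:    {calibrated:.2f}")
print(f"overconfident ECE: {overconfident:.2f}")
```

A model that hallucinates with high confidence behaves like the second case: its stated confidence says little about whether the answer is correct.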

They published their code for evaluation here:

GitHub: THU-KEG/LRM-FactEval

On-Policy RL with Optimal Reward Baseline

This paper introduces On-Policy RL with Optimal Reward Baseline (OPO), a reinforcement learning algorithm designed to improve the stability and efficiency of aligning LLMs with human preferences.

While RLHF, especially with Proximal Policy Optimization (PPO), has been instrumental in enhancing both alignment and reasoning abilities of LLMs, it comes with drawbacks. PPO's reliance on auxiliary components like value models and its tendency toward large policy shifts can reduce sample diversity and lead to unstable training, contributing to what's known as the alignment tax.

OPO addresses these issues by using exact on-policy training and introducing a theoretically optimal reward baseline that minimizes gradient variance. This results in a simpler setup with no need for value or reference models or regularization terms like KL divergence. Instead, it uses a single policy model optimized directly for expected reward.
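A small sketch may help show what a variance-minimizing baseline looks like in practice. For policy gradients, the classical optimal baseline is the average reward weighted by the squared norm of the log-probability gradient. My reading of the paper is that, under the simplifying assumption that this squared norm is proportional to the response length, the baseline reduces to a length-weighted average of the rewards. Treat that simplification, the function names, and the toy numbers below as assumptions, not as the authors' code.

```python
def length_weighted_baseline(rewards, lengths):
    """Baseline = sum(length_i * reward_i) / sum(length_i).

    Assumes the squared gradient norm of each sampled response is
    proportional to its length in tokens (a simplifying assumption).
    """
    total_length = sum(lengths)
    return sum(r * l for r, l in zip(rewards, lengths)) / total_length


def advantages(rewards, lengths):
    """Reward minus baseline for each response sampled for one prompt."""
    baseline = length_weighted_baseline(rewards, lengths)
    return [r - baseline for r in rewards]


# Four responses sampled on-policy for the same prompt (toy values):
# 1.0 = correct final answer, 0.0 = incorrect.
rewards = [1.0, 0.0, 1.0, 0.0]
lengths = [100, 300, 200, 400]  # response lengths in tokens

baseline = length_weighted_baseline(rewards, lengths)
adv = advantages(rewards, lengths)
print(f"baseline: {baseline:.2f}")
print("advantages:", [round(a, 2) for a in adv])
```

Here the plain mean reward would be 0.5, while the length-weighted baseline is lower because the long responses are the wrong ones. No value model is involved: the baseline comes directly from the sampled rewards and lengths.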

Experiments on the DeepSeek-R1-Distill-Qwen-7B model show that OPO improves performance and stability across various math reasoning tasks. The method yields more diverse, less repetitive responses and avoids the instability and policy drift common in existing RLHF approaches.

They released their training code here:

GitHub: microsoft/LMOps/tree/main/opo