This week, we review:

⭐ MiniMax-M1: Scaling Test-Time Compute Efficiently with Lightning Attention

Optimizing Length Compression in Large Reasoning Models

Reinforcement Learning with Verifiable Rewards Implicitly Incentivizes Correct Reasoning in Base LLMs

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

⭐ MiniMax-M1: Scaling Test-Time Compute Efficiently with Lightning Attention

MiniMax-M1 is a new large reasoning model (LRM) designed to scale test-time compute efficiently for extended reasoning tasks.

It is built on MiniMax-Text-01.

It introduces a hybrid Mixture-of-Experts (MoE) architecture combined with Lightning Attention, a linear attention variant optimized for I/O throughput. This design interleaves softmax-based Transformer blocks with multiple lightweight transformer layers, enabling efficient scaling to long sequences with up to one million tokens of input and 80K tokens of output. A 40k version is also available.

The model contains 456 billion total parameters with 45.9 billion active during inference. Compared to existing models like DeepSeek-R1, it achieves significant FLOP savings, under 50% at 64K token generation and about 25% at 100K tokens.

The RL training exploits a new algorithm called CISPO, which discards GRPO’s trust region constraint in favor of clipped importance sampling weights.

This approach makes full gradient utilization across all tokens, yielding empirical speedups over methods like GRPO and DAPO.

MiniMax-M1 is pretrained on a 7.5T reasoning-centric dataset and further refined via supervised fine-tuning and large-scale RL. Training tasks span verifiable domains like mathematics, logic, and software engineering, as well as unverifiable ones, including QA and creative writing.

Optimizing Length Compression in Large Reasoning Models

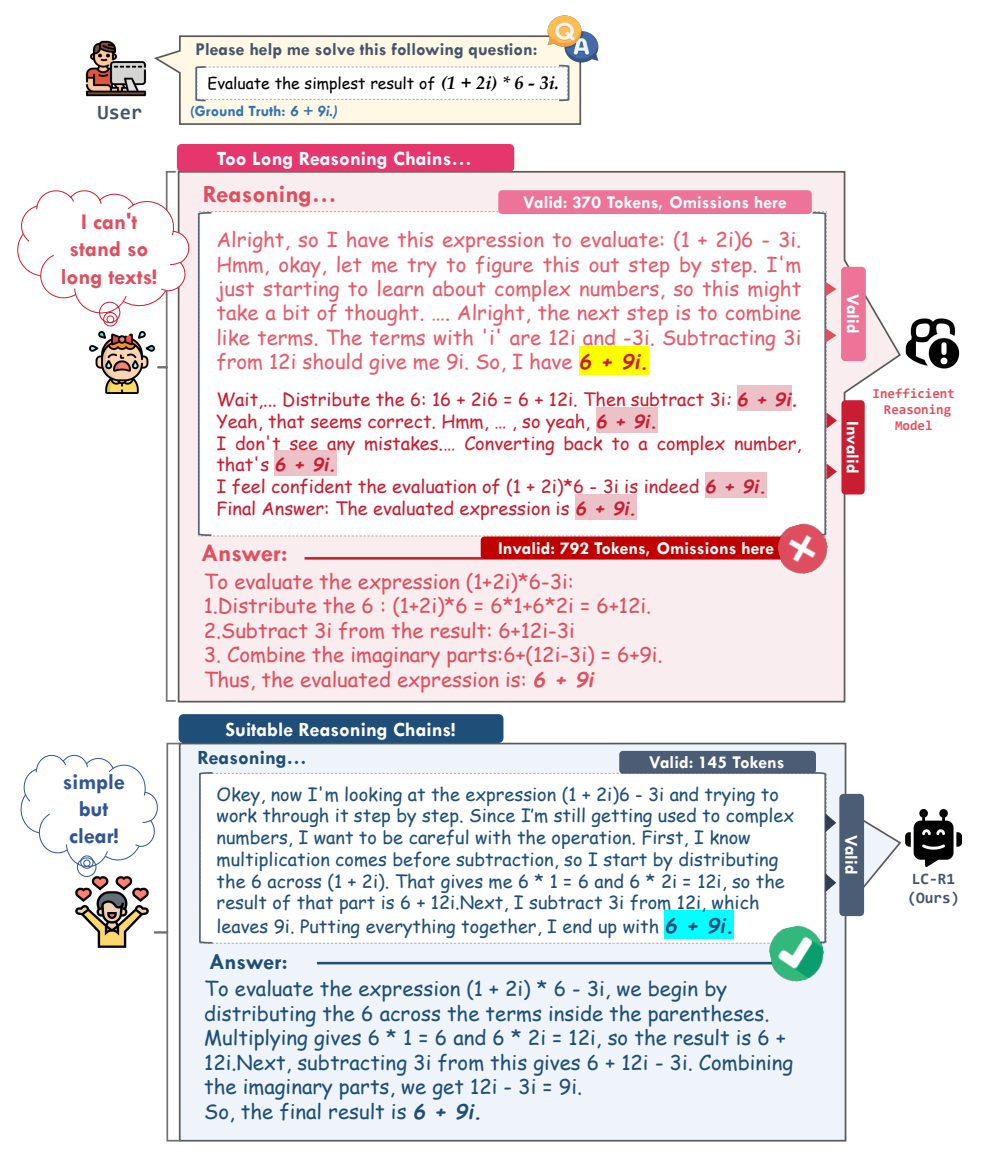

Large Reasoning Models (LRMs) like OpenAI’s O1 and DeepSeek-R1 often suffer from excessive reasoning, termed “overthinking”, where even simple problems trigger unnecessarily long and redundant reasoning chains. This leads to substantial inefficiencies in computation without added benefit to answer accuracy.

A key inefficiency comes from what the authors call “invalid thinking”: redundant self-checking after a correct answer is already derived. To address this, the paper introduces the Valid Thinking (VT) rate, a new metric to assess how much of a model’s reasoning is essential versus wasteful.

Building on this insight, the authors propose LC-R1, a GRPO-based post-training method that integrates two custom reward signals: a Length Reward for overall brevity and a Compress Reward to explicitly discourage continuation after correct solutions are found. These rewards guide the model toward reasoning that is both sufficient and concise.

Empirical results across seven reasoning benchmarks show that LC-R1 achieves up to 50% reduction in reasoning length with only a ~2% drop in accuracy, outperforming prior compression methods like SFT, DPO, ThinkPrune, and O1-Pruner. LC-R1’s reasoning remains robust across task complexity levels and retains the model’s exploration capacity.

They release their code here:

GitHub: zxiangx/LC-R1

The recent excitement around long chain-of-thought (CoT) reasoning kicked off a wave of interest in Reinforcement Learning with Verifiable Rewards (RLVR), where LLMs generate reasoning step-by-step and get feedback based on whether the final answer is correct, using deterministic checkers.

The big promise of RLVR is that it lets models "learn to think" through trial and error, kind of like teaching them to reason through experience. But there’s a problem: while RLVR often improves Pass@1, it tends to hurt or fail to improve Pass@K. This raises a question: if RLVR makes models better, why does the base model sometimes catch up or beat the RL-tuned model when we look at the top-K outputs?

A popular hypothesis says RLVR doesn’t actually teach new reasoning paths. It just samples the already-good ones more efficiently, but narrows the model’s overall output diversity. But this paper challenges that idea. Instead, the authors argue RLVR does incentivize new and better reasoning. It’s just that metrics like Pass@K are misleading. Sometimes, base models get lucky and guess the right answer without valid reasoning.

To fix this, they propose a new metric called CoT-Pass@K, which only counts outputs where both the answer and the reasoning steps are correct. Under this metric, RLVR actually looks great. It consistently improves reasoning quality across all values of K, not just the top-1 output.

They back this up with a theoretical explanation and experiments using automated CoT verifiers (based on DeepSeek-R1-0528-Qwen3-8B) and show that correct reasoning patterns start emerging early in RL training. This new view reframes RLVR as a way to train models to reason well, and not just guess well, and helps explain why previous metrics may have masked its actual benefits.