This week, we read:

⭐Tensor Product Attention Is All You Need

Evaluating Sample Utility for Data Selection by Mimicking Model Weights

Towards Best Practices for Open Datasets for LLM Training

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

Tensor Product Attention Is All You Need

Managing long sequences during LLM inference poses significant computational and memory challenges, particularly due to the storage of key-value (KV) caches. The KV cache’s memory consumption scales linearly with sequence length, which limits the maximum context window that fits within current hardware constraints.
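To make the linear scaling concrete, the snippet below is a back-of-the-envelope sketch of standard MHA KV cache size. The configuration (32 layers, 32 KV heads of dimension 128, 2-byte FP16/BF16 storage) is an assumed, Llama-2-7B-like example, not something taken from the paper.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for every layer and token."""
    # Factor 2: one cached tensor for keys and one for values.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem

# Assumed example configuration (illustrative only).
cfg = dict(num_layers=32, num_kv_heads=32, head_dim=128)
for seq_len in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(seq_len=seq_len, **cfg) / 2**30
    print(f"{seq_len:>7} tokens -> {gib:.1f} GiB")
```

Under these assumptions each token costs 0.5 MiB of cache, so 4,096 tokens need 2 GiB and 131,072 tokens need 64 GiB per sequence, before counting model weights or activations.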

Several approaches have been explored to address this memory bottleneck. Token compression and pruning strategies, such as sparse attention patterns and token eviction, can reduce cached states but risk discarding important tokens. KV cache quantization, which stores the cached keys and values at lower precision, is another option.

Offloading the KV cache reduces memory usage at the cost of increased I/O latency.

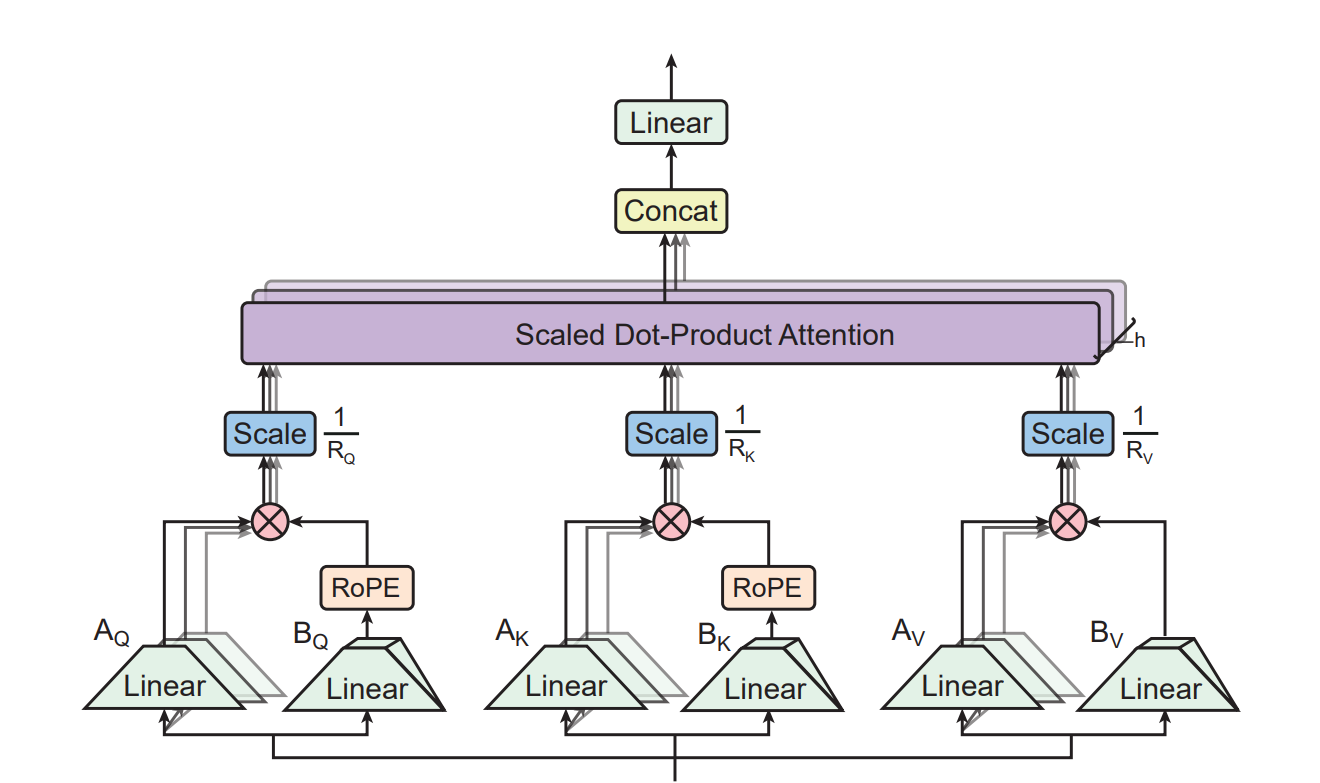

To overcome these limitations, this paper introduces Tensor Product Attention (TPA), a new mechanism that factorizes queries (Q), keys (K), and values (V) using contextual tensor decompositions during attention computation. TPA dynamically constructs low-rank, contextual representations, achieving significant memory savings while enhancing representational capacity. It reduces KV cache memory usage by an order of magnitude compared to standard multi-head attention (MHA) and improves performance metrics, such as pretraining validation loss (perplexity).
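The factorization idea can be sketched in NumPy for keys only, under stated assumptions: each token's key matrix of shape (n_heads, head_dim) is written as a scaled sum of R outer products, K_t = (1/R) Σ_r a_r(x_t) ⊗ b_r(x_t), where a_r(x_t) spans the heads, b_r(x_t) spans the head dimension, and both come from linear projections of the hidden state x_t. The sizes, random weights, and the names W_a and W_b are hypothetical and not taken from the T6 code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
d_model, n_heads, head_dim, rank = 512, 8, 64, 2
seq_len = 16

# Hypothetical linear maps producing the contextual factors from each hidden state.
W_a = rng.standard_normal((d_model, rank * n_heads)) / np.sqrt(d_model)
W_b = rng.standard_normal((d_model, rank * head_dim)) / np.sqrt(d_model)

x = rng.standard_normal((seq_len, d_model))  # token hidden states

# Contextual factors per token: a is (seq, rank, heads), b is (seq, rank, head_dim).
a = (x @ W_a).reshape(seq_len, rank, n_heads)
b = (x @ W_b).reshape(seq_len, rank, head_dim)

# K_t = (1/R) * sum_r outer(a_r(x_t), b_r(x_t)): same (heads, head_dim) shape as MHA keys.
K = np.einsum("srh,srd->shd", a, b) / rank

# Per-token cache size if we store the factors instead of the full key matrix.
full_per_token = n_heads * head_dim                # 8 * 64 = 512 values
factored_per_token = rank * (n_heads + head_dim)   # 2 * (8 + 64) = 144 values
print(K.shape, full_per_token, factored_per_token)
```

With these toy sizes, the factors need 144 values per token instead of 512. With 32 heads of dimension 128 and rank 2, the count would drop from 4,096 to 320 per token (12.8x); the savings actually reported in the paper depend on the ranks it chooses for Q, K, and V. Values can be factorized the same way.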

TPA integrates natively with RoPE. The authors propose the Tensor ProducT ATTenTion Transformer (T6), a TPA-based architecture that demonstrates improved language modeling performance, reduced KV cache size, and better downstream evaluation results. TPA unifies existing attention mechanisms by showing that MHA, MQA, and GQA can be derived as non-contextual variants of TPA.
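Two of these claims can be checked numerically in a small sketch. Assume each key is written as K = (1/R) Σ_r a_r ⊗ b_r, with a_r over heads and b_r over the head dimension. Because RoPE is a linear rotation applied identically to every head's head-dimension vector, rotating the full key equals rotating only the b_r factors, which is one way to see why the factors can be pre-rotated before caching. Fixing a_r to one-hot head vectors with R equal to the number of heads recovers per-head MHA keys. The interleaved-pair RoPE layout and all sizes below are assumptions; this is not the authors' implementation.

```python
import numpy as np

def rope(v: np.ndarray, pos: int, base: float = 10000.0) -> np.ndarray:
    """Rotary embedding on the last axis (interleaved pairs) for one position."""
    d = v.shape[-1]
    theta = base ** (-np.arange(0, d, 2) / d)  # one frequency per pair of dims
    cos, sin = np.cos(pos * theta), np.sin(pos * theta)
    v_even, v_odd = v[..., 0::2], v[..., 1::2]
    out = np.empty_like(v)
    out[..., 0::2] = v_even * cos - v_odd * sin
    out[..., 1::2] = v_even * sin + v_odd * cos
    return out

rng = np.random.default_rng(0)
n_heads, head_dim, rank, pos = 4, 8, 2, 5
a = rng.standard_normal((rank, n_heads))   # head-axis factors
b = rng.standard_normal((rank, head_dim))  # head-dimension factors

# Rotating the full (heads, head_dim) key matrix ...
K = np.einsum("rh,rd->hd", a, b) / rank
lhs = rope(K, pos)
# ... matches rotating only the b factors before forming the product.
rhs = np.einsum("rh,rd->hd", a, rope(b, pos)) / rank
print(np.allclose(lhs, rhs))

# Non-contextual special case: rank = n_heads and a_r = e_r (fixed one-hot rows).
# Scaling the per-head keys by n_heads cancels the 1/R factor.
per_head_keys = rng.standard_normal((n_heads, head_dim))
a_mha = np.eye(n_heads)
K_mha = np.einsum("rh,rd->hd", a_mha, n_heads * per_head_keys) / n_heads
print(np.allclose(K_mha, per_head_keys))
```

Both checks print True. MQA and GQA could be sketched similarly by letting a_r share factors across heads, though the exact mapping in the paper may be parameterized differently.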

This work could be very impactful, and hopefully these advantages will be confirmed in real-world applications.

They released their code here:

GitHub: tensorgi/T6

Evaluating Sample Utility for Data Selection by Mimicking Model Weights

Large-scale web-crawled datasets often include noise, biases, and irrelevant content. To address this, data filtering techniques refine raw web content to enhance model performance, as seen in datasets like FineWeb.

However, current filtering methods rely on handcrafted heuristics, domain expertise, and expensive experimentation. These methods often lack fine-grained insights into individual samples and their utility during training, leading to coarse filtering and suboptimal results.

Existing techniques for data selection often involve semantic similarity measures, specialized filtering networks, or training influence models. These approaches add complexity and dependencies on additional datasets or specialized training.

To overcome these challenges, this paper introduces the Mimic Score, a new data quality metric that evaluates a sample’s contribution to weight updates using a pre-trained reference model. By leveraging the alignment of a sample’s gradient with the direction toward the reference model in weight space, Mimic Score avoids the need for downstream datasets or specialized training and serves as a selection guide.
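A toy version of the idea can be written for linear regression. This sketch assumes the score is the cosine similarity between a sample's negative gradient and the vector from the current weights to the reference weights; the paper may use a projection or a different normalization. Label noise is simulated by flipping the sign of about 20% of the targets, and the "reference model" is simply the weights that generated the clean labels.

```python
import numpy as np

def mimic_scores(per_sample_grads: np.ndarray, theta: np.ndarray,
                 theta_ref: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Cosine similarity between each sample's update direction (-grad) and
    the direction from the current weights toward the reference weights."""
    direction = theta_ref - theta
    updates = -per_sample_grads
    dots = updates @ direction
    norms = np.linalg.norm(updates, axis=1) * np.linalg.norm(direction)
    return dots / (norms + eps)

rng = np.random.default_rng(0)
n, d = 200, 10
theta_ref = rng.standard_normal(d)   # stands in for the pre-trained reference model
X = rng.standard_normal((n, d))
y = X @ theta_ref
noisy = rng.random(n) < 0.2          # corrupt ~20% of labels by flipping their sign
y[noisy] *= -1

theta = np.zeros(d)                  # model trained from scratch
residuals = X @ theta - y
grads = residuals[:, None] * X       # per-sample gradient of 0.5 * (x . theta - y)^2
scores = mimic_scores(grads, theta, theta_ref)
print(scores[~noisy].mean(), scores[noisy].mean())
```

In this setup, clean samples get non-negative scores (their gradients pull toward the reference weights) and corrupted samples get non-positive ones, with no downstream validation set involved.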

Building on the Mimic Score, the paper presents Grad-Mimic, a two-stage automated data selection framework:

During Training: Grad-Mimic prioritizes high-value samples using Mimic Score, steering the model toward the reference model’s weight space.

After Training: Grad-Mimic assesses sample utility across training steps and aggregates these insights for ensemble filtering.
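The two stages can be sketched on the same kind of toy regression problem. The concrete rules below are assumptions made for illustration, not the paper's exact procedure: during training, negative scores are clipped to zero and the rest are normalized into per-sample weights for the batch update; after training, each sample's score history feeds two simple filters (mean score above zero, and a majority of steps with a positive score), and a sample is kept only if both agree.

```python
import numpy as np

def mimic_scores(grads, theta, theta_ref, eps=1e-12):
    # Assumed score: cosine between -grad and the direction toward the reference weights.
    direction = theta_ref - theta
    dots = -grads @ direction
    return dots / (np.linalg.norm(grads, axis=1) * np.linalg.norm(direction) + eps)

rng = np.random.default_rng(1)
n, d, steps, lr, batch = 500, 10, 100, 0.05, 50
theta_ref = rng.standard_normal(d)
X = rng.standard_normal((n, d))
y = X @ theta_ref
noisy = rng.random(n) < 0.2
y[noisy] *= -1

theta = np.zeros(d)
score_sum, score_pos, seen = np.zeros(n), np.zeros(n), np.zeros(n)

for step in range(steps):
    idx = rng.choice(n, size=batch, replace=False)
    grads = (X[idx] @ theta - y[idx])[:, None] * X[idx]
    s = mimic_scores(grads, theta, theta_ref)
    # Stage 1 (assumed rule): clip negative scores, normalize the rest into weights.
    w = np.clip(s, 0.0, None)
    w = w / w.sum() if w.sum() > 0 else np.full(batch, 1.0 / batch)
    theta -= lr * (w[:, None] * grads).sum(axis=0)
    # Record each sample's score for the post-training stage.
    score_sum[idx] += s
    score_pos[idx] += s > 0
    seen[idx] += 1

# Stage 2 (assumed rule): two filters over the score history, combined by agreement.
seen_mask = seen > 0
mean_score = np.where(seen_mask, score_sum / np.maximum(seen, 1), 0.0)
vote = np.where(seen_mask, score_pos / np.maximum(seen, 1), 0.0)
keep = (mean_score > 0) & (vote > 0.5)
print(f"kept {keep.sum()} samples; noisy among kept: {noisy[keep].mean():.2%}")
```

The kept subset should contain a much smaller share of corrupted samples than the roughly 20% in the full set. A real setup would score samples with per-example gradients of a neural network rather than this closed-form linear gradient.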

Experimental validation highlights Grad-Mimic’s effectiveness:

In controlled scenarios with label noise, Grad-Mimic identifies mislabeled samples and provides accurate dataset quality assessments, improving model performance across six image datasets.

When applied to large-scale web-crawled data, Grad-Mimic enhances performance for CLIP models trained from scratch on datasets with 10 million and 100 million samples. Using public CLIP weights as a reference, the framework refines datasets more effectively than human-designed filters, achieving higher performance with fewer data samples. Mimic scores also complement CLIP score-based filters by removing low-value samples.

⭐Towards Best Practices for Open Datasets for LLM Training

The development of AI systems depends heavily on the datasets used to train LLMs, making transparency in data practices essential for accountability and innovation. However, leading AI companies, such as OpenAI, Anthropic, Google, and Meta, have become less open about their datasets compared to earlier efforts like Google’s T5 or Meta’s original LLaMA. Criticism of exploitative data practices, especially from literary and creative communities, and multiple copyright lawsuits have further discouraged companies from disclosing data sources.

In contrast, an ecosystem of open LLM developers is working toward greater transparency and open access to training data. This effort was highlighted during the June 2024 Dataset Convening, co-hosted by Mozilla and EleutherAI, which brought together 30 experts to discuss challenges and opportunities in creating open-access and openly licensed datasets. Using case studies such as EleutherAI’s forthcoming Common Pile and YouTube-Commons, the group identified seven guiding principles:

Foster a competitive LLM ecosystem.

Enable accountability and transparency through reproducibility.

Minimize harms and enable preference signals.

Support and improve diversity.

Strive for reciprocity.

Collaborate with like-minded actors.

Preserve data for the long term.

This non-technical paper outlines challenges similar to those faced by the early open-source software movement, such as data quality, standardization, and sustainability, all of which often depend on community contributions. Open datasets can serve as public goods that support innovation, accountability, and scrutiny.

While not exhaustive, this paper aims to establish a shared reference point for the open LLM ecosystem, capture emerging consensus, and highlight key challenges and open questions in advancing open datasets for AI training.