Reviewed this week:

⭐Why Does the Effective Context Length of LLMs Fall Short?

Aligning Large Language Models via Self-Steering Optimization

SemiEvol: Semi-supervised Fine-tuning for LLM Adaptation

Can Knowledge Editing Really Correct Hallucinations?

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

Why Does the Effective Context Length of LLMs Fall Short?

Recent LLMs like Llama 3.1 now support a context length of 128K tokens, a 16x increase over the 8K tokens supported by Llama 3. These longer contexts aim to broaden the practical applications of LLMs, but using them effectively remains challenging.

Despite these longer training context lengths, many LLMs show a noticeable gap between their theoretical and practical performance. Studies indicate that the effective context length, i.e., the length of input the model can actually use, often falls short of the training length. For instance, Llama 3.1's effective context length is only 64K tokens, half of the 128K it was trained to handle.

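The authors identify a key cause in how these models are trained: a left-skewed position frequency distribution. In a training sequence of length L, a relative distance d between a query and a key occurs only L − d times, so small distances are seen far more often than large ones. Natural data amplifies this effect, since most documents are much shorter than the maximum training length. As a result, the position indices that represent distant parts of the input are undertrained, and the model handles long-range dependencies poorly. The authors observed this pattern in pre-training datasets like SlimPajama, where the frequency of distant positions drops sharply.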
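To make the skew concrete, the sketch below counts how often each relative distance appears across a set of causally masked training sequences. The document lengths are made-up illustrative numbers, not SlimPajama statistics, and the function name is hypothetical.

```python
from collections import Counter


def relative_position_counts(seq_lengths):
    """Count how often each relative distance d = i - j (query i, key j <= i)
    occurs across causally masked training sequences of the given lengths."""
    counts = Counter()
    for length in seq_lengths:
        for d in range(length):
            # Queries i = d, ..., length - 1 each see exactly one key at distance d.
            counts[d] += length - d
    return counts


# Illustrative corpus: mostly short documents, a few long ones (made-up numbers).
lengths = [512] * 90 + [4096] * 9 + [32768]
counts = relative_position_counts(lengths)
for d in (0, 256, 2048, 16384, 32767):
    print(f"distance {d:>5}: seen {counts[d]:>6} times")
```

In this toy corpus, distance 0 is seen 115,712 times while distance 32,767 is seen exactly once, even though one full-length document is included.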

To address this, the authors propose ShifTed Rotary position embeddING (STRING). During inference, STRING shifts well-trained position indices so that they overwrite the undertrained indices otherwise used for distant tokens.

This compensates for the undertrained distant positions and improves the model's ability to capture long-range relationships without additional training and without adding computational complexity. STRING is implemented with FlashAttention and combines two techniques: sliding window attention, which keeps the original positions for nearby tokens, and a targeted shift of the position indices for distant tokens.
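The position shift can be illustrated on a tiny relative-position matrix. This is a simplified sketch under an assumed formulation: relative distances d ≥ S are remapped to d − S + W, where the shift S and local window W are parameter names chosen here for illustration. It ignores the FlashAttention implementation entirely, so check the HKUNLP/STRING repository for the exact rule.

```python
def string_relative_positions(seq_len, shift, window):
    """Simplified sketch of STRING-style position shifting (assumed formulation).

    Row i lists the relative position used between query i and each key j <= i.
    Distances d = i - j below `shift` keep their original value; larger distances
    are remapped to d - shift + window, reusing smaller, better-trained indices.
    """
    if not 0 <= window < shift:
        raise ValueError("expected 0 <= window < shift")
    rows = []
    for i in range(seq_len):
        row = []
        for j in range(i + 1):
            d = i - j
            row.append(d - shift + window if d >= shift else d)
        rows.append(row)
    return rows


positions = string_relative_positions(seq_len=8, shift=4, window=2)
print(positions[-1])  # [5, 4, 3, 2, 3, 2, 1, 0]
print(max(max(row) for row in positions))  # 5
```

With 8 tokens, S = 4 and W = 2, the largest index used drops from 7 to 5, so the rarely trained indices 6 and 7 are never used, while the nearest tokens keep their original positions.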

The authors released their code here:

GitHub: HKUNLP/STRING

Aligning Large Language Models via Self-Steering Optimization

Instruct LLMs are trained to align with human preferences. This alignment made products like ChatGPT possible, but aligning LLMs effectively is a complex task.

Alignment algorithms such as Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO) rely heavily on preference data that distinguishes preferred from rejected responses: PPO trains a reward model on this data, while DPO optimizes the policy on the preference pairs directly. This requires large amounts of annotated preference data, and reward models need frequent updates to prevent reward hacking. This makes the process resource-intensive and prone to errors due to human limitations.
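To see why these methods depend on preference pairs, here is the standard DPO loss for a single (chosen, rejected) pair, computed from summed log-probabilities under the trained policy and a frozen reference model. The value beta = 0.1 is just a common choice, not something the paper specifies.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, from summed log-probabilities of each
    response under the trained policy and a frozen reference model."""
    chosen_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(margin)) == softplus(-margin)
    return softplus(-margin)


print(dpo_loss(-42.0, -40.0, -42.0, -40.0))  # policy == reference: log(2)
print(dpo_loss(-38.0, -44.0, -42.0, -40.0))  # chosen favored more: smaller loss
```

Every term needs both a preferred and a rejected response, which is exactly the annotated data that automated alignment methods try to generate without humans.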

The authors have been exploring automated alignment approaches to replace human-annotated signals with scalable alternatives. Current methods involve either using the model to judge responses or generating responses based on predefined rules. However, these strategies often suffer from reward inaccuracies and off-policy outputs, which compromise the quality of alignment.

To address these limitations, they propose Self-Steering Optimization (SSO), which generates automated, accurate, and on-policy preference signals directly from the policy model. SSO prompts the model with a query together with contrasting principles, steering the same model toward both a preferred and a rejected response without additional inputs. The method aims to keep these signals accurate as training progresses, allowing the model to refine its learning independently.
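A minimal sketch of how contrasting principles can turn a single policy into its own source of preference pairs. The prompt format, the `generate` callable, the `PreferencePair` type, and the toy policy are all hypothetical; SSO's actual prompts and the mechanism that keeps its signals accurate during training are not reproduced here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PreferencePair:
    query: str
    chosen: str
    rejected: str


def build_pair(query: str, good_principle: str, bad_principle: str,
               generate: Callable[[str], str]) -> PreferencePair:
    """Hypothetical sketch: the same policy answers one query twice, steered by a
    principle and by its opposite, which yields an on-policy preference pair."""
    chosen = generate(f"Principle: {good_principle}\nQuery: {query}")
    rejected = generate(f"Principle: {bad_principle}\nQuery: {query}")
    # Assumption of this sketch: the pair keeps only the plain query, so training
    # does not condition on the principles.
    return PreferencePair(query=query, chosen=chosen, rejected=rejected)


def toy_policy(prompt: str) -> str:
    """Stand-in for an LLM call: echoes the principle it was steered with."""
    principle = prompt.splitlines()[0].removeprefix("Principle: ")
    return f"<answer written to: {principle}>"


pair = build_pair("What does DNS do?", "Be accurate and concise.",
                  "Be vague and padded with filler.", toy_policy)
print(pair.chosen)
print(pair.rejected)
```

Because both responses come from the current policy, the resulting pair stays on-policy, unlike pairs built from another model's outputs.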

Experiments with SSO on models like Qwen2 and Llama 3.1 showed continuous improvements across multiple benchmarks, both objective (e.g., GPQA, MATH) and subjective (e.g., MT-Bench). SSO achieved these results without relying on human annotations, even outperforming baseline models that used annotated data.

The authors released their code here:

GitHub: icip-cas/SSO

SemiEvol: Semi-supervised Fine-tuning for LLM Adaptation

Supervised fine-tuning (SFT) is essential for adapting LLMs to specific tasks or domains, but it requires large amounts of labeled data, which can be costly to obtain. Unsupervised pre-training on domain data is a common alternative, but it requires a lot of data and compute, which often makes it impractical. In real-world applications, a small labeled dataset combined with a much larger pool of unlabeled data is typical. This is an opportunity to use the labeled data to improve performance on the unlabeled data, but doing so effectively is challenging.

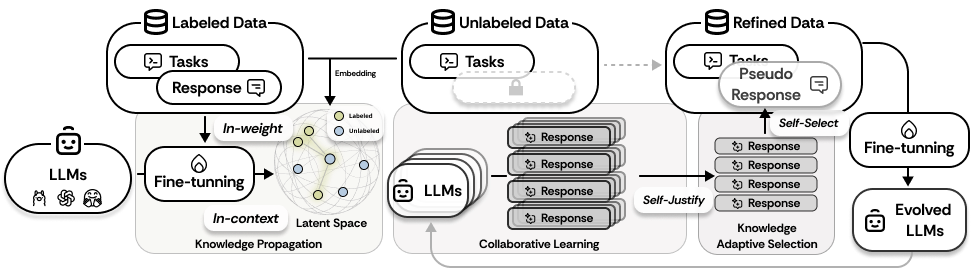

To address this, the authors introduce SemiEvol, a framework designed to improve LLM reasoning in these hybrid-data scenarios. SemiEvol uses a bi-level strategy focused on two areas: knowledge propagation and data selection. For knowledge propagation, it uses the labeled data to adapt the model both through weight updates and through in-context learning, where k-nearest-neighbor retrieval supplies relevant labeled examples for each prediction. For data selection, SemiEvol has multiple LLMs collaboratively generate and self-justify responses, then selects high-quality unlabeled samples based on confidence measures such as response entropy. Together, these steps produce pseudo-responses for the unlabeled data, which are then used to improve the model's performance on the target tasks.
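Both steps can be sketched with toy data: cosine-similarity k-NN retrieval for picking in-context demonstrations, and entropy-based filtering for choosing which unlabeled samples get pseudo-responses. Measuring "response entropy" over the final answers proposed by several models is an assumption of this sketch; the paper may compute it differently (for example, from token probabilities). The 0.7 threshold, the 2-D embeddings, and all function names are hypothetical.

```python
import math
from collections import Counter


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def knn_demos(query_emb, labeled, k=2):
    """Pick the k labeled examples closest to the query (cosine similarity)
    to serve as in-context demonstrations."""
    return sorted(labeled, key=lambda ex: cosine(query_emb, ex["emb"]), reverse=True)[:k]


def answer_entropy(answers):
    """Entropy (in nats) of the final answers proposed by several models."""
    total = len(answers)
    return -sum(c / total * math.log(c / total) for c in Counter(answers).values())


def select_pseudo_labels(candidates, max_entropy=0.7):
    """Keep unlabeled questions whose candidate answers mostly agree; the majority
    answer becomes the pseudo-response."""
    return {
        question: Counter(answers).most_common(1)[0][0]
        for question, answers in candidates.items()
        if answer_entropy(answers) <= max_entropy
    }


labeled = [
    {"text": "labeled example A", "emb": [1.0, 0.0]},
    {"text": "labeled example B", "emb": [0.0, 1.0]},
    {"text": "labeled example C", "emb": [0.9, 0.1]},
]
print([ex["text"] for ex in knn_demos([1.0, 0.0], labeled)])

candidates = {
    "q1": ["42", "42", "42"],  # full agreement: entropy 0
    "q2": ["42", "42", "41"],  # mostly agree: entropy about 0.64
    "q3": ["40", "41", "42"],  # no agreement: entropy about 1.10
}
print(select_pseudo_labels(candidates))
```

Only q1 and q2 pass the threshold, so q3, where the models disagree completely, never becomes a training example.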

The authors released their code here:

GitHub: luo-junyu/SemiEvol

Can Knowledge Editing Really Correct Hallucinations?

LLMs have demonstrated strong capabilities across many tasks, but they often produce hallucinations, i.e., plausible but inaccurate information. This happens partly because their internal knowledge is limited and they struggle with fast-changing facts.

Since retraining these models from scratch is expensive, researchers have developed knowledge editing methods to update and correct their knowledge. However, existing datasets like WikiData_recent, ZsRE, and WikiBio do not verify that the LLMs actually hallucinate on the test questions before editing. As a result, they cannot reliably measure how well editing techniques correct hallucinations.

A study on Llama 2 7B highlights this issue: the model already performed well on these datasets before any knowledge editing, which led to misleading conclusions about the effectiveness of different editing methods. To address this, the authors created HalluEditBench, a benchmark that tests how well knowledge editing techniques correct real-world hallucinations. HalluEditBench covers nine domains and 26 topics and evaluates three LLMs (Llama 2 7B, Llama 3 8B, and Mistral-v0.3-7B). Each technique is assessed along five dimensions: Efficacy, Generalization, Portability, Locality, and Robustness.
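The core idea behind HalluEditBench, evaluating edits only on facts the model actually gets wrong, can be sketched in a few lines. Exact string matching, the toy facts, and the function names are assumptions for illustration; the benchmark's actual question construction and judging are not reproduced here, and the second score below is only an Efficacy-style accuracy on the hallucinated subset.

```python
def filter_hallucinations(questions, answer_before):
    """Keep only the questions the unedited model answers incorrectly."""
    return [q for q in questions if answer_before(q["question"]) != q["answer"]]


def accuracy(questions, answer):
    """Fraction of questions answered correctly (exact string match)."""
    if not questions:
        return 0.0
    return sum(answer(q["question"]) == q["answer"] for q in questions) / len(questions)


questions = [
    {"question": "Capital of Australia?", "answer": "Canberra"},
    {"question": "Largest planet?", "answer": "Jupiter"},
    {"question": "Author of Dune?", "answer": "Frank Herbert"},
]
before = {"Capital of Australia?": "Sydney", "Largest planet?": "Jupiter",
          "Author of Dune?": "Isaac Asimov"}
after = {**before, "Capital of Australia?": "Canberra"}  # one fact edited

hallucinated = filter_hallucinations(questions, before.get)
print(accuracy(questions, after.get))     # naive score over all questions
print(accuracy(hallucinated, after.get))  # score on genuine hallucinations only
```

The naive score (2/3) credits the edited model for a fact it already knew; restricting evaluation to genuine hallucinations gives 0.5.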

According to the experiments, current knowledge editing methods may be less effective than their high scores on existing datasets suggest. For instance, FT-M and MEMIT achieved near-perfect scores on those datasets, but their Efficacy Scores on HalluEditBench were much lower, indicating that they struggle to fix real-world hallucinations. No single technique outperformed the others across all five dimensions: ICE and GRACE were the best at improving Efficacy but had weaknesses in other areas. Most techniques brought little to no improvement in Generalization, and some even performed worse than unedited models on Portability. Moreover, effectiveness varied significantly across domains and LLMs, underscoring how difficult it is to correct hallucinations reliably.