This week, we read:

⭐A Refined Analysis of Massive Activations in LLMs

Expanding RL with Verifiable Rewards Across Diverse Domains

AdaptiVocab: Enhancing LLM Efficiency in Focused Domains through Lightweight Vocabulary Adaptation

Gemma 3 Technical Report

⭐: Papers that I particularly recommend reading.

New code repositories (list of all repositories):

⭐A Refined Analysis of Massive Activations in LLMs

This very interesting paper studies the curious behavior in LLMs called “massive activations”, basically, a few neurons firing with values way bigger than the rest. These aren’t just numerical outliers; they actually act like implicit bias terms, subtly steering the model’s attention toward certain tokens. What’s interesting is that this happens across different LLMs, not just one architecture.

Despite being common, massive activations haven’t been studied very consistently. Some papers focus only on models like LLaMA or GPT-2, and many experiments skip including a BOS (beginning-of-sentence) token by default, which can affect results. For instance, in Gemma-3, these high activations caused float16 overflows during inference and fine-tuning, values went beyond 65,536, hitting infinity or NaNs, mostly because of how layer norms stacked up in the decoder.

I ran an empirical study showing that Gemma 3, indeed, doesn’t work with float16. We have to load the model with bfloat16, which has a much larger range.

The problem also shows up during quantization, especially in models using GLU (Gated Linear Unit) variants in their feedforward layers. These activation spikes tend to cluster in early and late layers, and mostly for specific tokens, not spread out across the whole sequence. That leads to big quantization errors locally, messing with the performance of the compressed model. This is something that I often observe with AutoRound quantization. Intel recommends skipping the problematic layers, but fancier methods are available, like Quantization-free Modules (QFeM) and Prefixes (QFeP).

This paper takes it further by analyzing massive activations across a broader range of LLMs—GLU and non-GLU alike—and benchmarks different mitigation strategies like Attention KV bias, Target Variance Rescaling (TVR), and Dynamic Tanh (DyT). The focus isn’t just on reducing those large values, but also on making sure the model’s performance and attention behavior don’t suffer in the process.

The code is available here:

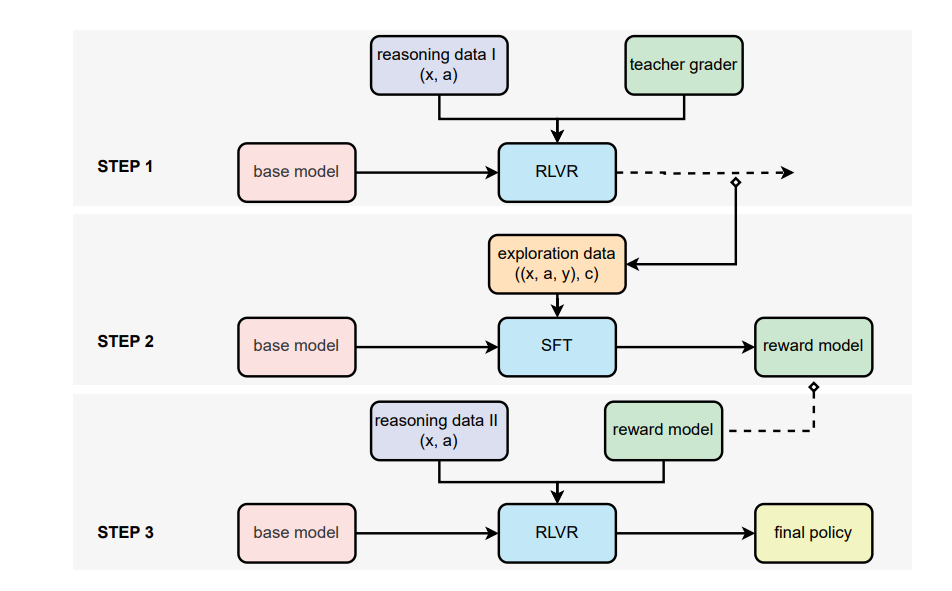

Expanding RL with Verifiable Rewards Across Diverse Domains

This paper explores how Reinforcement Learning with Verifiable Rewards (RLVR) can be extended beyond traditional domains like math and coding to improve complex reasoning in LLMs across diverse fields such as medicine, chemistry, and psychology.

Note: RLVR was first proposed with TULU 3. We studied it here:

RLVR typically relies on binary rewards based on whether a model’s output matches a known correct answer. While effective for structured tasks with clear reference answers, this is much more complicated for open-ended domains.

The authors find that using high-quality, expert-written reference answers allows for consistent evaluation across domains, with strong agreement between both closed- and open-source models. This suggests that reference-based grading is more straightforward and reliable than reference-free evaluation, which requires identifying subtle mistakes in reasoning.

To overcome the limitations of binary scoring, the paper introduces model-based soft rewards, where a small LLM is trained to act as a generative verifier. Instead of strict pass/fail labels, the verifier outputs probabilities that reflect the confidence in a response's correctness. This approach improves robustness and scalability, even without domain-specific reward model annotations.

Experiments show that using this method, a 7B model trained with RLVR outperforms much larger models (like Qwen2.5-72B) by up to 8% accuracy on free-form tasks across multiple domains. Moreover, the model generalizes well and benefits from increasing training data, unlike rule-based reward systems that struggle with unstructured answers.

AdaptiVocab: Enhancing LLM Efficiency in Focused Domains through Lightweight Vocabulary Adaptation

This paper introduces AdaptiVocab, an end-to-end method for adapting the vocabulary of LLMs to low-resource, domain-specific settings. LLMs rely on tokenization, where each token is mapped to a high-dimensional vector and passed through attention layers. Since token count directly affects memory and computation, optimizing the vocabulary can improve efficiency, especially in generative models, where each token requires a separate forward pass.

AdaptiVocab addresses this by replacing low-utility general-purpose tokens with high-frequency, domain-relevant multi-word tokens. It works on top of any tokenizer and model, using a frequency-based scoring method to identify candidate n-tokens and a novel embedding initialization technique based on exponential weighting. The adaptation process is lightweight, requiring only a few hours on a single GPU and fine-tuning only a small subset of model layers.

The method was tested on Mistral-7B and Llama-2 7B across three niche domains—Earth Sciences, History of Physics, and Games and Toys—showing over 25% reduction in token usage without sacrificing performance. Evaluations using human judgment and LLM-based metrics confirmed that generation quality and end-task accuracy were preserved or improved.

They will release their code here:

GitHub: itay-nakash/AdaptiVocab

Compared to Gemma 2, Gemma 3 introduces multimodality, long-context handling (up to 128K tokens), and enhanced multilingual capabilities, all while matching or exceeding the performance of previous versions.

For image input, Gemma 3 uses a customized SigLIP vision encoder, turning images into soft token sequences and compressing them into 256-vector embeddings to reduce inference cost. It also supports flexible image resolutions using a Pan and Scan method inspired by LLaVA.

To handle long contexts efficiently, Gemma 3 uses an architectural trick: inserting several local attention layers (each with a 1024-token span) between global ones, with one global layer for every five local ones. This reduces memory usage during inference without sacrificing performance.

Training-wise, the model sticks to a refined Gemma 2 recipe, using the same tokenizer as Gemini 2.0, but with a rebalanced data mix for better multilingual and image understanding. All models are trained with knowledge distillation.

Post-training focuses on boosting math, reasoning, coding, and chat skills, using a new method that improves performance across all tasks. The final instruction-tuned Gemma 3 models are described as both powerful and flexible, significantly outperforming earlier versions.

If you are interested in fine-tuning Gemma 3, have a look here: